Legacy data – the information stored in outdated, and often siloed, systems – is frequently seen as a challenge.

However, legacy data is also a treasure trove: decades of transaction history, customer interactions, product performance, etc., that could fuel new analytics or AI models if unlocked.

The challenge is how to make this old data serve new objectives – such as real-time analytics, customer 360 views and AI-driven insights – without a complete overhaul of legacy systems. Here we focus on approaches that enable organisations to leverage legacy data in modern architectures.

Data virtualisation:

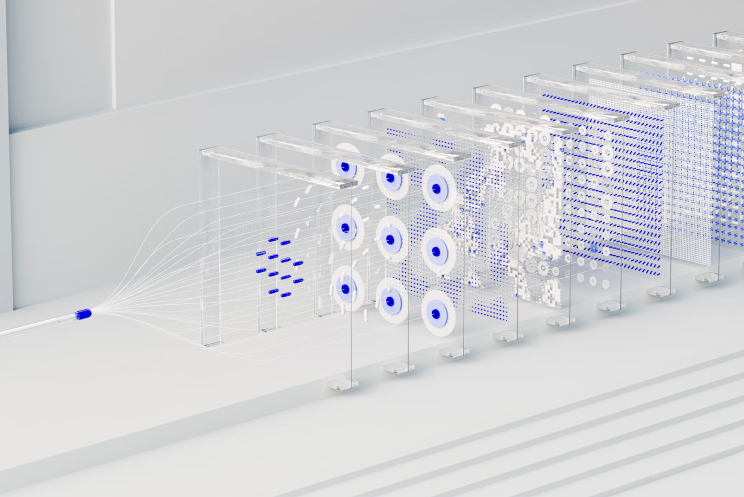

Data virtualisation allows companies to create a virtual data layer that integrates data from multiple sources (including legacy databases) in real time, without physically moving or copying the data.

Think of it as creating a unified view of data that might reside across an IBM mainframe, a 1990s-era Oracle data warehouse, and a modern cloud data lake – the virtualisation layer lets users query it as if it were one database.

For example, a British wealth management firm leveraged a data virtualisation platform to combine data from legacy investment systems with new cloud databases, enabling a consolidated compliance reporting solution without migrating everything at once.

The benefit is quicker time-to-data. Instead of a year-long migration, virtualisation can expose legacy data within weeks in a consumable form (e.g. over ODBC/JDBC or via APIs) for analysts or applications. This approach is particularly useful for operational use-cases that need current values: for example, an online app can check whether a customer account exists in an old system by querying the virtual layer.

Data virtualisation tools (Denodo, TIBCO, etc.) are increasingly used in digital transformation because they reduce dependence on legacy system change cycles. The legacy system remains as it is, while the virtualisation layer handles query translation and integration.
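The idea of a virtual layer can be illustrated with a minimal sketch. It is not how any particular virtualisation product works: two in-memory SQLite databases stand in for a legacy system and a modern store, and the table names (`CUSTMAST`, `customers`) and the view name (`unified_customers`) are hypothetical. The point it shows is that a single view can map two different schemas onto one shape without copying any rows.

```python
import sqlite3


def build_virtual_layer() -> sqlite3.Connection:
    """Return a connection exposing one view over two separate databases."""
    # "main" plays the modern store; the attached database plays the legacy one.
    # isolation_level=None puts the connection in autocommit mode.
    conn = sqlite3.connect(":memory:", isolation_level=None)
    conn.execute("ATTACH DATABASE ':memory:' AS legacy")

    # Hypothetical schemas: terse legacy column names vs. modern ones.
    conn.execute("CREATE TABLE legacy.CUSTMAST (CUST_NO TEXT, CUST_NM TEXT)")
    conn.execute("CREATE TABLE main.customers (customer_id TEXT, full_name TEXT)")
    conn.execute("INSERT INTO legacy.CUSTMAST VALUES ('000123', 'A SMITH')")
    conn.execute("INSERT INTO main.customers VALUES ('C-9001', 'B Jones')")

    # The "virtual layer": a TEMP view that presents both sources in one shape.
    # Nothing is copied; every query on the view reads the underlying tables.
    conn.execute(
        """
        CREATE TEMP VIEW unified_customers AS
        SELECT CUST_NO AS customer_id, CUST_NM AS full_name, 'legacy' AS source
        FROM legacy.CUSTMAST
        UNION ALL
        SELECT customer_id, full_name, 'modern' AS source
        FROM main.customers
        """
    )
    return conn


conn = build_virtual_layer()
rows = conn.execute(
    "SELECT customer_id, source FROM unified_customers ORDER BY customer_id"
).fetchall()
print(rows)
```

Because the view is resolved at query time, a row added to the legacy table afterwards appears in the unified view immediately. A real platform would add query translation, pushdown and security across heterogeneous systems, none of which this sketch attempts.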

APIs and microservices:

Another technique is wrapping legacy systems with modern APIs. Rather than having the new application read the legacy database directly (which may be risky, or impossible in real time), engineers can build an API service that acts as an intermediary: it calls the legacy system (via an older protocol or a batch process) and exposes the results through a modern API that returns structured JSON.

For example, a UK insurance company with a 20-year-old policy administration system built an API on top of it so that a new customer mobile app could fetch policy details. The API translates mobile app requests into legacy system queries behind the scenes. This technique often goes hand in hand with microservices, which carve out specific business functions (like “get customer profile”) into services that can interface with both legacy and new systems. API gateways can then manage these calls at scale.

The benefit of this approach is that legacy data becomes accessible on demand, so it can be used in new digital products. The caveat is that it can expose the performance limits of the old system, such as an inability to handle high-frequency API calls. To mitigate this, some organisations use caching or intermediate data stores: for example, extracting legacy data nightly into a cloud database that the API then reads from for speed, refreshing as needed.
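The wrapper-plus-cache pattern described above might look roughly like the sketch below. Everything specific in it is an assumption made for illustration: the fixed-width record layout, the `LegacyPolicyApi` class, the JSON field names and the `fake_legacy_lookup` function that stands in for the real legacy call. It shows two things: translating a legacy record into JSON, and using a time-to-live cache so that repeated requests do not reach the old system.

```python
import json
import time
from typing import Callable, Dict, Tuple


def parse_policy_record(record: str) -> dict:
    """Translate a fixed-width legacy record into a JSON-friendly dict.

    Hypothetical layout: policy id (8 chars), holder name (20 chars),
    start date as YYYYMMDD (8 chars).
    """
    start = record[28:36]
    return {
        "policyId": record[0:8].strip(),
        "holderName": record[8:28].strip().title(),
        "startDate": f"{start[0:4]}-{start[4:6]}-{start[6:8]}",
    }


class LegacyPolicyApi:
    """Intermediary that fronts a slow legacy lookup with a TTL cache."""

    def __init__(
        self,
        fetch_record: Callable[[str], str],
        ttl_seconds: float = 300.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._fetch_record = fetch_record
        self._ttl = ttl_seconds
        self._clock = clock
        self._cache: Dict[str, Tuple[float, str]] = {}

    def get_policy_json(self, policy_id: str) -> str:
        now = self._clock()
        cached = self._cache.get(policy_id)
        if cached is not None and now - cached[0] < self._ttl:
            return cached[1]  # fresh enough: do not touch the legacy system
        body = json.dumps(parse_policy_record(self._fetch_record(policy_id)))
        self._cache[policy_id] = (now, body)
        return body


calls = []


def fake_legacy_lookup(policy_id: str) -> str:
    """Stand-in for the real legacy call; records how often it is hit."""
    calls.append(policy_id)
    return policy_id.ljust(8) + "JANE DOE".ljust(20) + "20040315"


api = LegacyPolicyApi(fake_legacy_lookup, ttl_seconds=60.0)
first = api.get_policy_json("POL00042")
second = api.get_policy_json("POL00042")  # answered from the cache
```

The time-to-live is the design lever here: a longer value shields the legacy system more but serves staler data, so it would normally be set per business function.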

Hybrid data architectures:

Many enterprises choose a hybrid approach to legacy data that mixes batch and real-time access: bulk migration where feasible, and virtualisation or API enablement for the rest.

For instance, a large retailer might extract sales data nightly from a legacy point-of-sale system into a cloud data lake for heavy analytics, but call an API on the legacy system for a real-time inventory lookup.

The benefit of a hybrid model is that it acknowledges that not all legacy data needs instant access – some can be synchronised periodically.
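The periodic side of a hybrid model is often an incremental extract driven by a high-water mark. The sketch below assumes an append-only `sales` table with an ever-increasing `sale_id`; the table names, columns and the `extract_increment` function are all hypothetical, and in-memory SQLite databases stand in for the point-of-sale system and the data lake.

```python
import sqlite3


def extract_increment(
    legacy: sqlite3.Connection, lake: sqlite3.Connection, watermark: int
) -> int:
    """Copy rows newer than `watermark` into the lake; return the new watermark."""
    rows = legacy.execute(
        "SELECT sale_id, sku, amount FROM sales WHERE sale_id > ? ORDER BY sale_id",
        (watermark,),
    ).fetchall()
    lake.executemany("INSERT INTO sales_history VALUES (?, ?, ?)", rows)
    lake.commit()
    # If nothing new arrived, keep the old watermark.
    return rows[-1][0] if rows else watermark


legacy = sqlite3.connect(":memory:")
legacy.execute("CREATE TABLE sales (sale_id INTEGER PRIMARY KEY, sku TEXT, amount REAL)")
legacy.executemany(
    "INSERT INTO sales VALUES (?, ?, ?)", [(1, "A1", 9.99), (2, "B2", 4.50)]
)

lake = sqlite3.connect(":memory:")
lake.execute(
    "CREATE TABLE sales_history (sale_id INTEGER PRIMARY KEY, sku TEXT, amount REAL)"
)

watermark = extract_increment(legacy, lake, 0)  # first run copies rows 1 and 2
legacy.execute("INSERT INTO sales VALUES (3, 'A1', 9.99)")
watermark = extract_increment(legacy, lake, watermark)  # second run copies row 3 only
```

This only picks up new rows. Updates and deletes to existing rows would be missed, which is one reason to pair batch extracts with change capture.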

Change-Data-Capture (CDC) tools can support this approach by streaming changes from legacy databases to modern platforms (this is common in bank migrations). A data lakehouse architecture can be fed by both legacy batch feeds and new streaming data, unifying analysis.
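On the receiving side, CDC amounts to applying an ordered stream of change events to a replica. The sketch below is a simplified model under stated assumptions: the `ChangeEvent` shape, the `lsn` sequence number and the `Replica` class are hypothetical, and real CDC tools typically derive such events from the source database's transaction log. It shows inserts, updates and deletes being applied in order, with already-seen events skipped so that a replayed batch is harmless.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, Optional


@dataclass(frozen=True)
class ChangeEvent:
    lsn: int  # sequence number, assumed to increase with each source change
    op: str  # "insert", "update" or "delete"
    key: str
    row: Optional[dict] = None


class Replica:
    """In-memory target kept in step with the legacy source."""

    def __init__(self) -> None:
        self.rows: Dict[str, dict] = {}
        self.last_lsn = 0

    def apply(self, events: Iterable[ChangeEvent]) -> int:
        """Apply events in sequence order; return how many were applied."""
        applied = 0
        for event in sorted(events, key=lambda e: e.lsn):
            if event.lsn <= self.last_lsn:
                continue  # already applied, e.g. a replayed batch
            if event.op == "delete":
                self.rows.pop(event.key, None)
            elif event.op in ("insert", "update"):
                self.rows[event.key] = dict(event.row or {})
            else:
                raise ValueError(f"unknown operation: {event.op}")
            self.last_lsn = event.lsn
            applied += 1
        return applied


replica = Replica()
events = [
    ChangeEvent(1, "insert", "acc-1", {"balance": 100}),
    ChangeEvent(2, "update", "acc-1", {"balance": 80}),
    ChangeEvent(3, "insert", "acc-2", {"balance": 5}),
    ChangeEvent(4, "delete", "acc-2"),
]
applied = replica.apply(events)
replayed = replica.apply(events[:2])  # redelivery of old events changes nothing
```

Tracking the last applied sequence number is what makes redelivery safe, which matters because streaming platforms commonly deliver events at least once rather than exactly once.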

Examples of legacy data usage:

Telecoms - re-purposing legacy data

A large telecoms provider has vast datasets from legacy networks. Instead of discarding “old” network logs, they collaborated with data scientists to use decades of legacy fault logs to train predictive models for network failures. By virtualising access to the archive logs (which sat in an older system) and combining them with current sensor data, they achieved a predictive maintenance system that reduces outages.

Healthcare - disparate legacy health record systems

A healthcare organisation was faced with disparate legacy health record systems. It piloted a “unified data layer” concept in which data from multiple sources was virtually unified (through an interoperability platform) to support AI analytics. APIs and data integration brought legacy record data together for analysis, rather than waiting for a legacy overhaul.

Banking - legacy customer data after acquisition

Because merging core banking systems carries high risk, one bank took an alternative approach when acquiring and merging with another organisation. It fed transactional data from both legacy core systems into a Hadoop-based data lake. This gave a unified analytics environment for customer segmentation and risk modelling across the entire new group, even though on the transactional side the customers remained on separate systems for some years. The approach created a bridge where legacy data was replicated to a modern platform for new uses, and the strategy of “liberate data first, decommission systems later” paid off by delivering business insights much earlier than waiting for full systems integration would have.

Techniques summary:

See below for a comparison of approaches to leverage legacy data without full replacement:

| Technique | How it works | Typical Use-Cases |
|---|---|---|
| Data Virtualisation | Create a virtual data layer that federates queries to legacy and modern sources, presenting a unified view. | Real-time dashboards or analytics that need to combine data from legacy and new systems (e.g. unified customer view across old CRM and new CRM). |
| Legacy APIs/Microservices | Wrap legacy system functions in APIs (REST/SOAP services) to be consumed by new applications or integration middleware. | Allow modern applications (web/mobile apps, partner systems) to retrieve or update data in legacy systems securely – e.g. Open Banking APIs on mainframe. |
| Batch ETL to Data Lake/Warehouse | Regularly extract legacy data (full or incremental) into a modern data repository for analysis. | Migrating historical data for BI/AI purposes, offloading query workload from legacy. E.g. nightly export of ERP data to cloud warehouse for reporting. |
| Change-Data-Capture (CDC) | Use CDC tools to stream changes from legacy databases to new platforms in near-real-time. | When you need more up-to-date data than nightly batch, but can't query legacy directly at scale. E.g. sync mainframe transactions to a Kafka stream. |
| Data Archival & Retirement | For legacy systems being decommissioned, archive their data in a searchable format (could be a data lake or specialised archive system). | Maintain access to old data (for compliance or occasional lookup) after retiring the application. E.g. archive 10 years of legacy HR system data to cloud storage with a query tool. |

Balancing risk and value:

The goal is to unlock legacy data value with minimal disruption.

Directly altering stable legacy platforms can introduce operational risk, so approaches like virtualisation and APIs aim to be non-intrusive.

However, one must monitor performance – virtualisation that issues complex joins to an old database could slow it down for regular users. Caching layers and careful query design are therefore important.

Governance is another concern: once legacy data flows into new environments, it falls under new governance – for example, ensuring that exposing legacy customer data via APIs still complies with GDPR (purpose limitation, consent, etc.). Many firms create governance policies specifically for data integration projects, to cover things like data ownership when combining sources and data lineage tracking (documenting where legacy data ends up).

“Organisations can no longer afford to keep their critical data trapped and unmanaged in legacy applications” - techUK

This is especially true given the importance of that data to AI/ML success. Yet, as that article notes, “replace everything that is old” is not viable under budget constraints.

The practical path is a targeted one:

Free the data (through APIs, virtualisation, migration) and gradually retire or modernise the legacy tech where possible. This incremental approach is widely used: organisations apply the “5 Rs of Legacy Management” (Retain, Retire, Replace, Rehost, Replatform) system by system, deciding how to handle each legacy application to best unlock data and reduce risk.

In conclusion, legacy data can indeed serve new innovations.

With clever architecture, yesterday’s systems can feed today’s AI models or customer experiences. Organisations that have embraced hybrid architectures find that, once integrated, a 30-year-old database can yield insights just as readily as a cloud data stream.