Modern applications face a constant tension between competing architectural demands: systems must scale efficiently, remain highly available, perform well under load and be maintainable without excessive operational overhead.

This blog post, adapted from a Tech Talk by Principal Software Engineer Luke Mitchell, explores cases where serverless architectures can address these requirements by shifting infrastructure management to cloud providers, allowing development teams to focus on building features rather than managing servers.

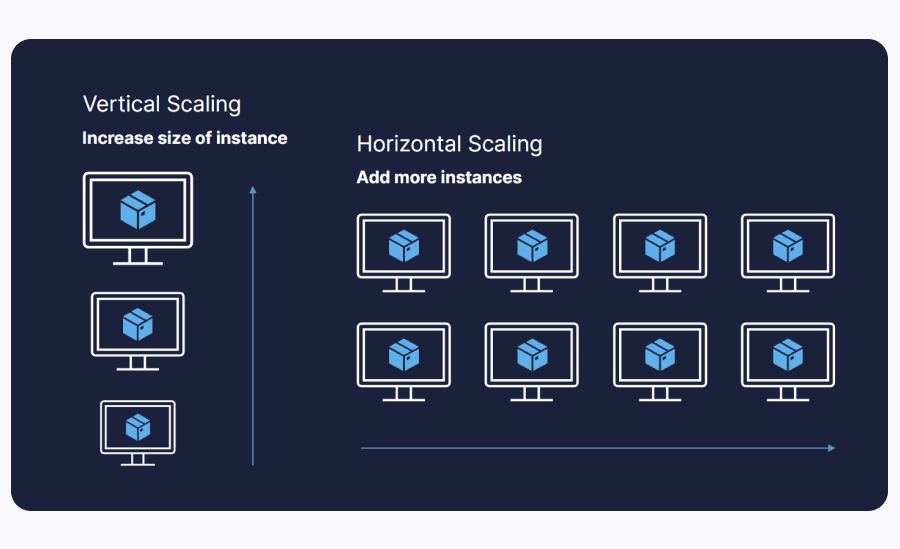

Scaling Strategies: Horizontal vs Vertical

Understanding scaling approaches provides the foundation for appreciating serverless benefits. Vertical scaling involves adding resources to a single instance: more CPU, RAM or storage. A downside of this approach is that the service still runs on one machine, which remains a single point of failure: when that machine goes down, the entire service becomes unavailable.

Horizontal scaling takes a different approach, adding more identical instances instead. This design provides built-in fault tolerance because multiple machines handle requests simultaneously, so if one machine fails, the others continue serving traffic. This redundancy makes horizontal scaling more resilient than vertical scaling, though it introduces additional complexity in orchestration and load distribution.
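To make the fault-tolerance argument concrete, here is a minimal sketch of a round-robin load balancer that skips instances marked as unhealthy. `RoundRobinBalancer` and the instance names are hypothetical; real load balancers use periodic health checks rather than manual `mark_down` calls.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Toy load balancer: rotates across instances, skipping unhealthy ones."""

    def __init__(self, instances):
        self.instances = list(instances)
        self.healthy = set(self.instances)
        self._ring = cycle(self.instances)

    def mark_down(self, instance):
        self.healthy.discard(instance)

    def mark_up(self, instance):
        self.healthy.add(instance)

    def route(self):
        # Try each instance at most once per call so a fully failed pool cannot loop forever.
        for _ in range(len(self.instances)):
            candidate = next(self._ring)
            if candidate in self.healthy:
                return candidate
        raise RuntimeError("no healthy instances: service unavailable")


fleet = RoundRobinBalancer(["vm-a", "vm-b", "vm-c"])  # horizontal: three instances
fleet.mark_down("vm-b")
print([fleet.route() for _ in range(4)])  # → ['vm-a', 'vm-c', 'vm-a', 'vm-c']

single = RoundRobinBalancer(["big-vm"])  # vertical: one large instance
single.mark_down("big-vm")
# single.route() now raises RuntimeError: the whole service is down
```

With three instances, losing `vm-b` only shifts its traffic to the survivors; with one large instance, the same failure takes the service offline.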

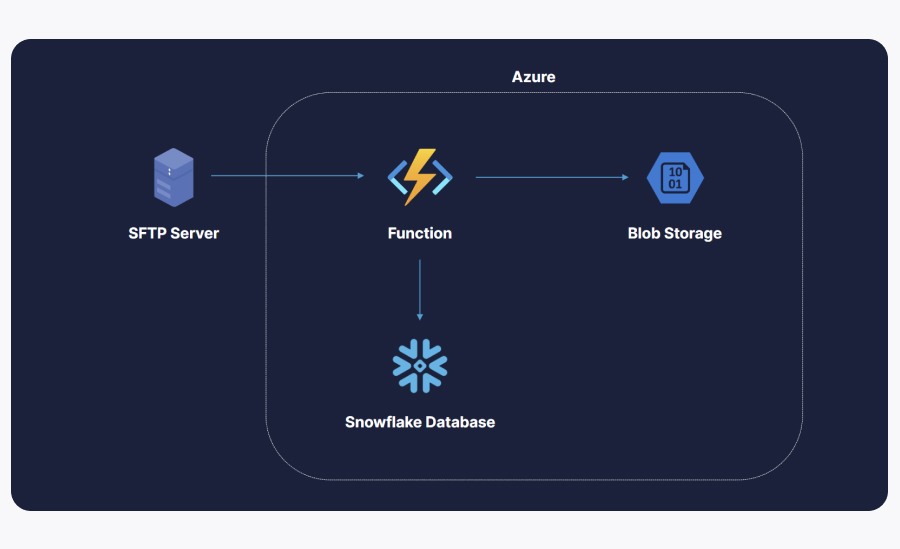

Case Study 1: From Monolithic Functions to Distributed Processing in Azure

The overhaul of a file processing system demonstrates how serverless patterns can transform an architecture. The initial implementation, shown above, used a single, continuously running Azure Function that fetched files from an SFTP server, processed each entry sequentially and wrote the results to a Snowflake database. This design had several limitations: it could scale only vertically, it was a single point of failure and it offered no clear recovery path when errors occurred mid-process.

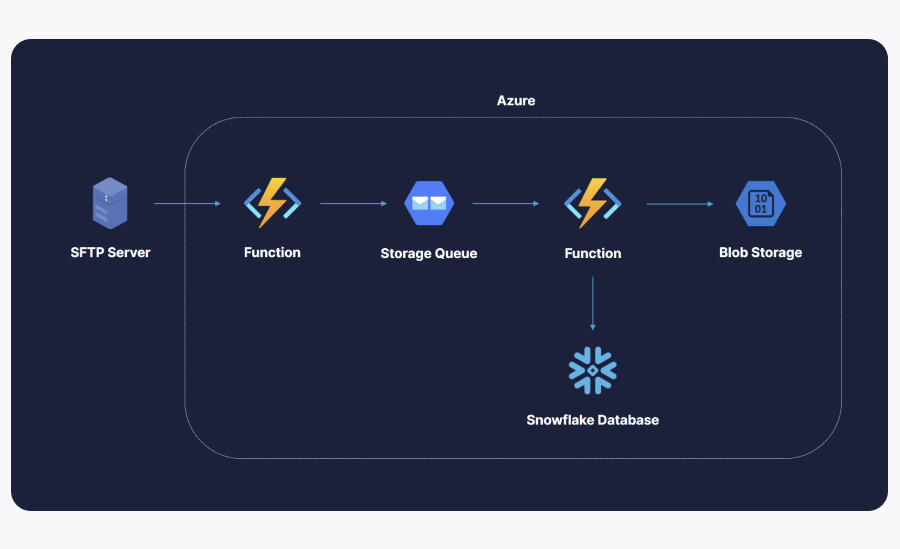

To mitigate these limitations, the system was refactored as shown above, splitting responsibilities across multiple components. The initial function now simply reads the file and places each entry on a storage queue as an individual message. As messages arrive, a second, queue-triggered function automatically scales out, running multiple instances that process messages in parallel. This turns sequential processing into concurrent execution, dramatically improving throughput.
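The split-then-fan-out pattern can be simulated locally as a rough sketch. Here an in-memory `queue.Queue` stands in for the Azure Storage queue and threads stand in for function instances; `split_file_to_queue`, `process_entry` and `run_consumers` are hypothetical names, and in a real deployment the Functions runtime, not this code, decides how many instances to run.

```python
import queue
import threading
from concurrent.futures import ThreadPoolExecutor


def split_file_to_queue(file_text: str, q: "queue.Queue[str]") -> int:
    """Role of the first function: one queue message per non-empty entry."""
    count = 0
    for line in file_text.splitlines():
        if line.strip():
            q.put(line)
            count += 1
    return count


def process_entry(entry: str) -> str:
    """Hypothetical per-entry work; the real pipeline would write to Snowflake here."""
    return entry.strip().upper()


def run_consumers(q: "queue.Queue[str]", instances: int = 4) -> list[str]:
    """Role of the queue-triggered function: several instances pull messages concurrently."""
    results: list[str] = []
    lock = threading.Lock()

    def consume() -> None:
        while True:
            try:
                entry = q.get_nowait()
            except queue.Empty:
                return  # queue drained: this instance goes idle
            processed = process_entry(entry)
            with lock:
                results.append(processed)

    with ThreadPoolExecutor(max_workers=instances) as pool:
        for _ in range(instances):
            pool.submit(consume)
    # Leaving the with-block waits for every consumer to finish.
    return results


q: "queue.Queue[str]" = queue.Queue()
split_file_to_queue("alice,10\nbob,20\n\ncarol,30\n", q)
print(sorted(run_consumers(q)))  # → ['ALICE,10', 'BOB,20', 'CAROL,30']
```

Because each entry is an independent message, no consumer needs to know which file it came from or how far through that file the pipeline has got.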

As well as speed, the switch to a serverless architecture improves fault tolerance. If a function instance fails mid-processing, the message returns to the queue and is retried automatically. Messages that fail repeatedly are moved to a poison queue for manual investigation, so problematic entries cannot block the entire pipeline. Unlike in the original architecture, there is no need to track processing state within files or implement complex restart logic, because the queue handles these concerns.
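The retry and poison-queue behaviour can be sketched as a small simulation. In Azure Functions the retry limit for queue triggers is the `maxDequeueCount` setting (5 by default), after which the message is moved to a queue named `<queue-name>-poison`; the sketch below only mimics that policy, and `process_with_retries` and `flaky_handler` are hypothetical.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Message:
    body: str
    dequeue_count: int = 0


def process_with_retries(bodies, handler, max_dequeue_count=5):
    """Simulate a queue trigger: failures are re-queued until they hit the limit."""
    work = deque(Message(body) for body in bodies)
    done, poison = [], []
    while work:
        msg = work.popleft()
        msg.dequeue_count += 1
        try:
            done.append(handler(msg.body))
        except Exception:
            if msg.dequeue_count >= max_dequeue_count:
                poison.append(msg.body)  # parked for manual investigation
            else:
                work.append(msg)  # becomes available again for a retry
    return done, poison


attempts = {}


def flaky_handler(body):
    attempts[body] = attempts.get(body, 0) + 1
    if body == "bad-record":
        raise ValueError("malformed entry")  # fails on every attempt
    if body == "flaky" and attempts[body] < 3:
        raise TimeoutError("transient error")  # succeeds on the third attempt
    return body.upper()


done, poison = process_with_retries(["ok", "flaky", "bad-record"], flaky_handler)
print(done, poison)  # → ['OK', 'FLAKY'] ['bad-record']
```

The transient failure recovers on retry, the malformed record ends up in the poison list after five attempts, and neither holds up the healthy message.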

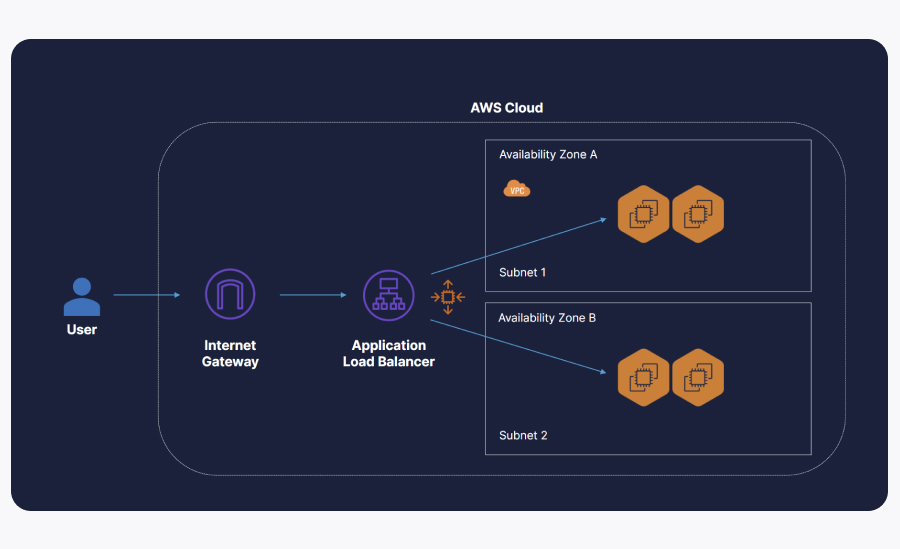

Case Study 2: Serving Static Content at Scale in AWS

Traditional web server architecture requires substantial infrastructure, as the diagram above shows. First, an application load balancer distributes user requests across EC2 instances deployed in multiple availability zones. Next, auto-scaling groups monitor traffic and adjust instance counts accordingly, adding capacity during peaks and removing it during lulls to control costs. Even so, each virtual machine incurs a cost whether it is actively serving requests or sitting idle.
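As a rough illustration of what "adjust instance counts accordingly" involves, the sketch below applies a simple proportional rule in the spirit of target-tracking scaling: resize the group so average CPU moves toward a target, clamped to minimum and maximum sizes. It is not AWS's exact algorithm, and `desired_capacity` and its parameters are hypothetical.

```python
import math


def desired_capacity(current: int, avg_cpu: float, target_cpu: float,
                     min_size: int, max_size: int) -> int:
    """Proportional scaling rule, clamped to the group's size limits."""
    if current == 0:
        return min_size
    wanted = math.ceil(current * avg_cpu / target_cpu)
    return max(min_size, min(max_size, wanted))


print(desired_capacity(4, avg_cpu=90, target_cpu=50, min_size=2, max_size=10))   # → 8 (peak)
print(desired_capacity(4, avg_cpu=20, target_cpu=50, min_size=2, max_size=10))   # → 2 (lull)
print(desired_capacity(8, avg_cpu=100, target_cpu=50, min_size=2, max_size=10))  # → 10 (capped)
```

Note that `min_size` never drops to zero here: even with no traffic, the group keeps paying for its minimum number of instances, which is the idle cost described above.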

The serverless alternative simplifies this substantially. Static files reside in an S3 bucket, with CloudFront serving as the access point. CloudFront is a content delivery network (CDN) with edge locations worldwide. When users request content that is already cached, it is served from the nearest edge location rather than fetched from the origin region. This geographic distribution reduces latency significantly for global audiences.

This serverless approach brings benefits for availability, performance and scalability:

- S3 stores files across multiple availability zones by default, so if one zone becomes unavailable, requests are served from the other zones without manual intervention.

- CloudFront caches content at edge locations, reducing origin server load and improving response times.

- The entire stack scales to handle traffic spikes without configuration changes or capacity planning.
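The edge-caching behaviour can be approximated with a toy model: requests go to the lowest-latency edge, and an edge only contacts the origin on a cache miss or after an object's time-to-live (TTL) expires. `EdgeCache`, `nearest_edge` and the edge names are hypothetical; CloudFront's real behaviour is governed by its cache policies and routing, which this does not reproduce.

```python
import time


class EdgeCache:
    """Toy CDN edge location: serves cached copies until their TTL expires."""

    def __init__(self, origin_fetch, ttl_seconds, clock=time.monotonic):
        self.origin_fetch = origin_fetch  # callable that reads from the origin (the bucket)
        self.ttl = ttl_seconds
        self.clock = clock
        self.store = {}  # path -> (body, expires_at)
        self.origin_requests = 0

    def get(self, path):
        now = self.clock()
        cached = self.store.get(path)
        if cached is not None and cached[1] > now:
            return cached[0]  # cache hit: no trip back to the origin
        self.origin_requests += 1  # miss or expired: fetch from origin and re-cache
        body = self.origin_fetch(path)
        self.store[path] = (body, now + self.ttl)
        return body


def nearest_edge(latency_ms):
    """Pick the edge location with the lowest measured latency to the user."""
    return min(latency_ms, key=latency_ms.get)


origin_bucket = {"/index.html": "<h1>Hello</h1>"}
fake_time = [0.0]
edge = EdgeCache(origin_bucket.__getitem__, ttl_seconds=60, clock=lambda: fake_time[0])

print(nearest_edge({"london": 12, "frankfurt": 25, "virginia": 80}))  # → london
for _ in range(100):
    edge.get("/index.html")
print(edge.origin_requests)  # → 1 (one miss, 99 hits)
fake_time[0] = 61.0  # TTL has expired
edge.get("/index.html")
print(edge.origin_requests)  # → 2
```

A hundred requests cost the origin a single read, which is why caching reduces origin load as well as latency.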

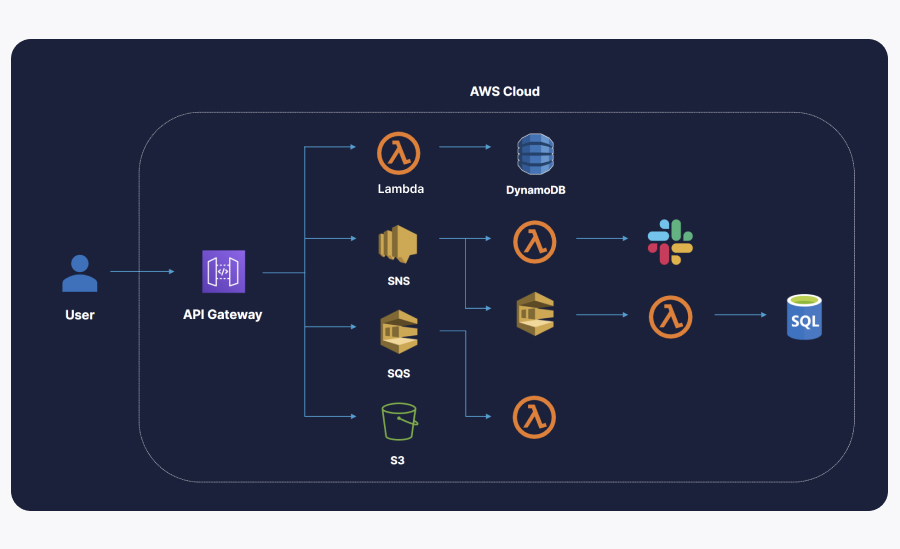

Case Study 3: API Infrastructure Without Servers in AWS

API servers typically follow the same pattern as web servers: virtual machines behind load balancers, deployed across availability zones for resilience. This infrastructure requires ongoing maintenance, including operating system patches, image updates, capacity planning and monitoring.

API Gateway provides a serverless alternative, acting as a unified entry point for API traffic. It integrates directly with numerous AWS services: Lambda functions for compute, DynamoDB for database access, SQS for message queuing and SNS for publish-subscribe patterns. This flexibility enables varied architectural patterns without managing underlying infrastructure, and makes getting started easier than provisioning and configuring virtual machines.
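For the Lambda integration, a handler can be as small as the sketch below. It assumes a REST API using Lambda proxy integration (payload format 1.0), where the event carries `httpMethod` and `pathParameters` and the function returns `statusCode`, `headers` and a string `body`. The `orderId` route and the hard-coded order status are hypothetical.

```python
import json


def lambda_handler(event, context):
    """Handle an API Gateway proxy request and return a proxy-format response."""
    method = event.get("httpMethod")
    path_params = event.get("pathParameters") or {}
    if method == "GET" and "orderId" in path_params:
        # Hypothetical lookup; a real function might query DynamoDB here.
        body = {"orderId": path_params["orderId"], "status": "shipped"}
        status = 200
    else:
        body = {"message": "not found"}
        status = 404
    return {
        "statusCode": status,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(body),
    }


fake_event = {"httpMethod": "GET", "pathParameters": {"orderId": "42"}}
response = lambda_handler(fake_event, None)
print(response["statusCode"], response["body"])
# → 200 {"orderId": "42", "status": "shipped"}
```

There is no server process, port or routing framework in the code: API Gateway handles the HTTP layer, so the function only maps a request event to a response.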

The publish-subscribe model provided by SNS is particularly powerful. A single message can fan out to multiple subscribers: for example, a Lambda function might send a notification to Slack while the same message is queued for asynchronous processing. This pattern enables event-driven architectures in which services respond to events without being tightly coupled to one another.
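The fan-out idea can be sketched with an in-memory topic. `Topic`, `notify_slack` and the order message are hypothetical; real SNS delivers to subscribers asynchronously and retries failed deliveries, whereas this sketch calls each subscriber synchronously.

```python
from queue import Queue


class Topic:
    """In-memory stand-in for an SNS topic: every subscriber receives every message."""

    def __init__(self):
        self.subscribers = []

    def subscribe(self, callback):
        self.subscribers.append(callback)

    def publish(self, message):
        for deliver in self.subscribers:
            deliver(message)  # the publisher does not know who is listening


sent_to_slack = []
work_queue = Queue()


def notify_slack(message):
    # Hypothetical: a real subscriber Lambda would call a Slack webhook here.
    sent_to_slack.append(f"New order: {message['orderId']}")


orders = Topic()
orders.subscribe(notify_slack)
orders.subscribe(work_queue.put)  # plays the role of an SQS queue subscription
orders.publish({"orderId": "42"})
print(sent_to_slack, work_queue.qsize())  # → ['New order: 42'] 1
```

Adding a third consumer means one more `subscribe` call; the publisher's code does not change, which is the loose coupling the pattern provides.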

The serverless API approach also brings several benefits:

- Multi-availability zone deployment happens by default.

- The platform automatically handles failover and scaling without explicit configuration.

- Updates don't require creating new machine images or coordinating rolling deployments across instances.

- The pay-per-use model means costs align directly with actual usage rather than provisioned capacity.

Trade-offs and Considerations

Serverless architectures introduce their own considerations. Cold starts, the latency incurred when a function initialises a new execution environment, can add noticeable delays for users. This can be mitigated, at additional cost, through provisioned concurrency, which keeps a specified number of Lambda execution environments initialised and ready to respond. This trade-off matters most for latency-sensitive applications where milliseconds count.

Cost efficiency also depends on scale. Serverless platforms charge per request and for the compute time each request consumes, making them economical for variable workloads. At extremely high sustained volumes, dedicated infrastructure may become more cost-effective, although this typically occurs only at the scale of major internet services.
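The break-even point can be estimated with simple arithmetic: pay-per-use cost grows linearly with request volume, while a server fleet costs roughly the same every month. The sketch below assumes Lambda-style billing (a per-request fee plus compute in GB-seconds); all prices, the 100 ms duration, the 0.5 GB memory size and the fleet cost are placeholders rather than current AWS figures, and it ignores extras such as API Gateway and data transfer charges.

```python
def serverless_monthly_cost(requests, avg_duration_s, memory_gb,
                            price_per_million_requests, price_per_gb_second):
    """Pay-per-use: a per-request fee plus compute billed in GB-seconds."""
    request_fee = requests / 1_000_000 * price_per_million_requests
    compute_fee = requests * avg_duration_s * memory_gb * price_per_gb_second
    return request_fee + compute_fee


def break_even_requests(fixed_monthly_cost, avg_duration_s, memory_gb,
                        price_per_million_requests, price_per_gb_second):
    """Monthly request volume at which pay-per-use matches a fixed server bill."""
    cost_per_request = (price_per_million_requests / 1_000_000
                        + avg_duration_s * memory_gb * price_per_gb_second)
    return fixed_monthly_cost / cost_per_request


# Placeholder prices and workload, not current AWS figures.
pricing = dict(avg_duration_s=0.1, memory_gb=0.5,
               price_per_million_requests=0.20, price_per_gb_second=0.00002)
vm_fleet_monthly = 500.0  # hypothetical always-on, load-balanced fleet

volume = break_even_requests(vm_fleet_monthly, **pricing)
print(f"break-even at about {volume / 1e6:.0f} million requests/month")
# → break-even at about 417 million requests/month
```

Below that volume the pay-per-use model is cheaper, and traffic that is bursty rather than sustained pushes the comparison further in its favour.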

Some use cases still favour traditional servers. Long-running processes, for instance, don't map cleanly to function execution models (AWS Lambda caps a single invocation at 15 minutes). Similarly, server-side rendering requires a server to generate HTML dynamically, which S3 and CloudFront alone cannot provide. Static site generation or pre-rendering can address some of these scenarios, but purely static hosting of client-rendered content can have SEO limitations without additional tooling.

A learning curve exists for both serverless and server-based approaches. Running servers well requires expertise in load balancers, auto-scaling groups and virtual machine maintenance. Serverless architectures, on the other hand, require different knowledge: message queues, function composition and event-driven design. Teams should weigh their existing skills and strategic direction when choosing between them.

Conclusion

Serverless architectures deliver meaningful advantages in scalability, performance, fault tolerance and maintainability. By abstracting infrastructure management, they enable teams to focus on application logic rather than operational concerns. While they are not a universal solution, they provide compelling benefits for most modern applications, particularly those with variable traffic patterns or limited operations resources. The case studies demonstrate that serverless patterns often simplify rather than complicate architecture, delivering better results with less overhead.