Microservices continue to provide a competitive advantage for businesses that are looking for new, effective digital solutions or looking to add value to their existing services. In fact, figures from Market Research Future show that microservice architecture adoption has a CAGR of 17%, with continued growth forecast over the next couple of years.

A Microservices story with Tequila at its heart

To help tell you about the power of microservices, I would like to begin with a story. Our story centres on Russ, an entrepreneur who wants to break into the alcoholic drinks market by selling his new tequila. To do this, he needs a website.

Enter software developer, Mark

Luckily for Russ, he knows just the man. He enlists his friend, a software developer with years of experience, knowledge of the latest technologies and an enthusiasm for improving his skills, to help him build a website. Let’s call him Mark.

In collaboration, Mark and Russ built a website with a UI where customers can view and buy the product. When a customer clicks the ‘buy’ button, the API is notified and begins the work needed to complete the purchase.

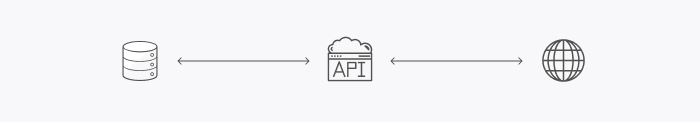

We’ll return to Mark and Russ’ business venture throughout this article, but for now, it’s worth noting that their approach is very common and is used by many organisations across the world. Often, there are no major problems and companies can get their website up-and-running without any roadblocks. A traditional setup would look something like this:

Traditional backend + frontend approach (Database/API/UI)

That being said, problems can still exist. In this article, principal consultant Ed Brown looks at the problems that can arise with this approach, focusing in particular on how the API can be improved using a microservice architecture.

API Problems

The first problem with APIs is that they’re hard to upgrade.

On top of this, APIs are more expensive to scale. Let’s say you have a standard tier for your API on Microsoft Azure, for which you pay £100 to host. If you wanted to scale this API, you would have to pay an additional £100 to do so.

They are also much harder to develop using a mix of technologies. For example, if you were writing your API in .NET, you would not be able to write part of it in JavaScript.

On top of their inflexibility, APIs pose messaging issues too. If your API is overloaded and hasn’t been scaled up, you are likely to receive a 503 Service Unavailable error, informing you that your server is too busy to process your messages.

Solving API Problems

Returning to our original scenario, we see that sales of Russ’ tequila are doing well. Very well. Customers are loving the product, orders come flooding in and profit is growing. In short: business is booming.

While Russ is ecstatic with his newfound prosperity and success, Mark is beginning to get a little worried; he understands that the API might not be able to handle all this traffic. Thankfully, Mark recalls a talk that he attended on the power of microservices a few months back.

When a customer purchases the tequila, they are notified that their order is being processed.

On the backend, this looks really simple. You have your API and UI. Here we want to not only scale the API, but to make it future-proof. Ensuring this protection will mean that our API has the capacity to support many customer orders, both now and in the future.

Enterprises that are implementing microservices recognise the value that they can provide in these areas. Research by open-source platform Camunda shows that 50% of businesses are adopting microservices to increase application resilience and 64% to improve the scalability of their applications.

The first thing to do in this situation is to add an Azure API Management service. This service is a great way to create consistent and modern API gateways for existing back-end services.

The service gives you the flexibility to have as many, or as few, APIs behind it as you wish. All of these APIs can be grouped under one endpoint and, what’s more, individual endpoints can be overridden. This proves useful for rerouting endpoints away from your API.

If you have an ‘orders’ endpoint on one API, for example, you can use API Management to send that endpoint somewhere else instead of to your API. The benefit of this is that it allows you to streamline your operations and ensure that traffic doesn’t overwhelm your API.
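The endpoint override can be pictured as a small routing table. The sketch below is plain C# rather than API Management configuration (which is set up in the Azure portal), and the two backend URLs are hypothetical placeholders chosen purely for illustration.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical backend addresses, for illustration only.
const string DefaultApi = "https://shop-api.example.com";
const string OrdersFunction = "https://shop-functions.example.com/api";

// Per-endpoint overrides: any path listed here bypasses the default API.
var overrides = new Dictionary<string, string>(StringComparer.OrdinalIgnoreCase)
{
    ["/orders"] = OrdersFunction,
};

// Resolve the backend URL that a request for the given path is forwarded to.
string ResolveBackend(string path) =>
    overrides.TryGetValue(path, out var backend)
        ? backend + path
        : DefaultApi + path;

Console.WriteLine(ResolveBackend("/orders"));   // https://shop-functions.example.com/api/orders
Console.WriteLine(ResolveBackend("/products")); // https://shop-api.example.com/products
```

The UI only ever sees one address; which backend actually answers a given path is decided behind the gateway.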

You only have one API URL to configure, which is useful when your app has a lot of configuration.

API Management also gives you control over access to each API. What this means is that no message will reach any of your code unless it gets past API Management.

Putting this into Practice

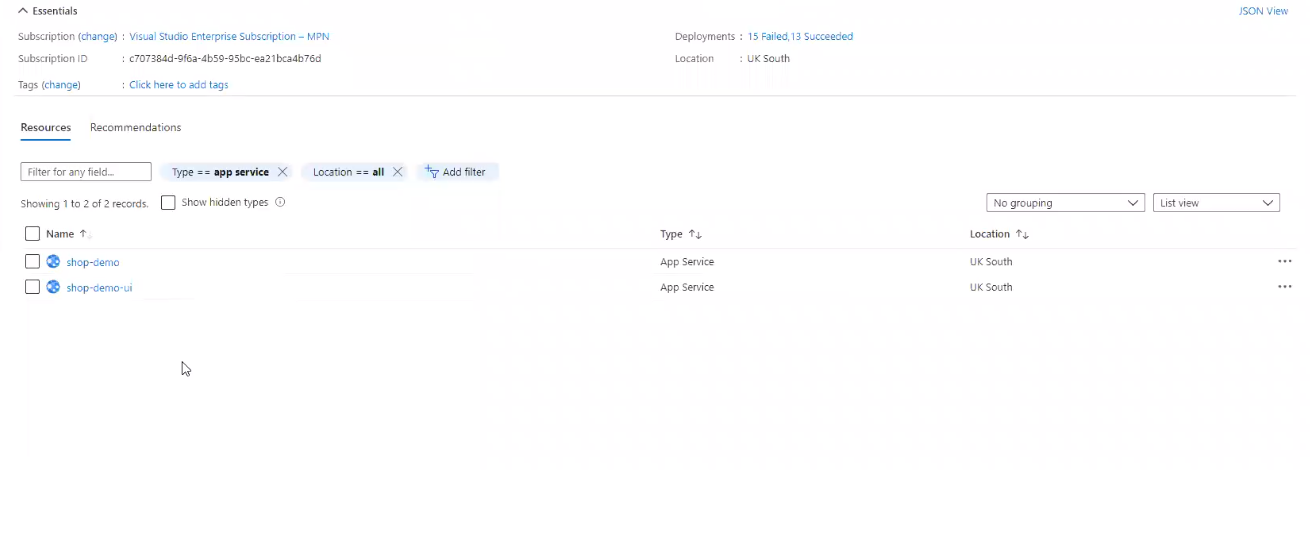

If we navigate to our API management resource, we will be shown a quota of our APIs. Selecting this item will bring us to our developer portal, where the API can be found.

Inside the API is a collection of the requests that it supports. Now, Mark is a great developer and, like any great developer, he is also very ‘efficient’; he would not want to change any code or configuration unless completely necessary. To achieve this, it is important for the API to have a custom domain. With this in place, you can remap your custom domain to your API Management service.

The beauty of setting this custom domain is that it allows you to put your API management in between the API and the UI without needing to change any further configurations. The API is able to use this custom URL without any issues.

Returning to our UI, we can again purchase a bottle of delicious tequila. The API works fine and the order begins to be processed through the API management resource. Success! But our work isn’t done just yet.

Azure Functions

Next, we want to create an Azure Function.

What is an Azure Function?

Azure Functions is a serverless solution that allows you to write less code and maintain less infrastructure, all the while saving on costs.

An Azure Function is highly scalable too, allowing up to 200 instances on a Consumption plan and up to 100 instances on a Premium plan. It’s worth noting that, on the Consumption plan, you only pay for what you use.

For those with dedicated plans, the number of instances available to Azure Functions depends on your infrastructure and the hardware you are using.

Both flexible and scalable, Azure Functions supports a range of languages, and you can create more than one function app. Say you have one function app in .NET; you can also have another in JavaScript. In this example, both apps would be able to communicate with one another and run side by side.

Azure Functions also supports many different triggers, such as HTTP, Storage Queue and Event Hub.

So if we return to the Azure portal, we can add a new function to our function app.

Functions > + Create > New Function (create title) > Create

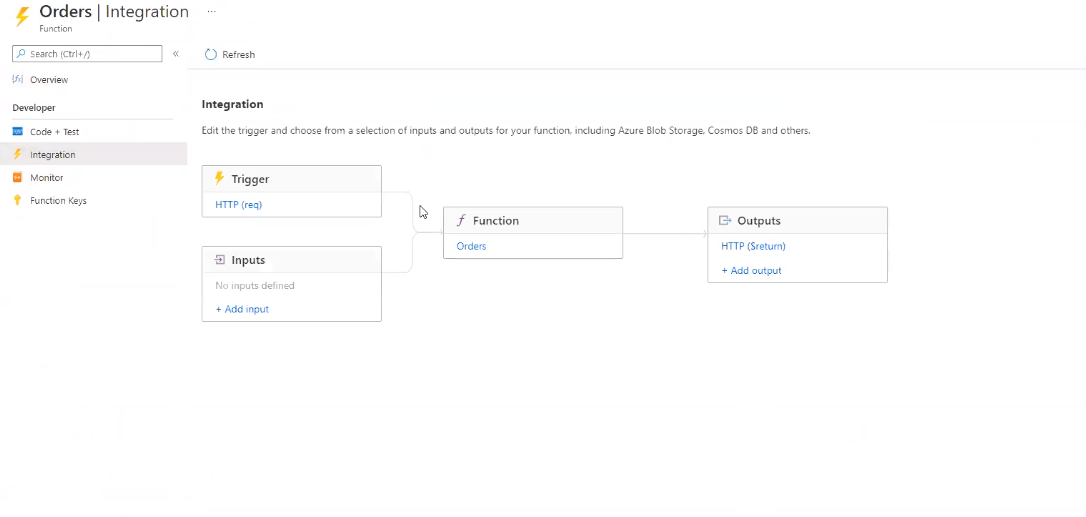

For this example we want to create an HTTP trigger; this will run whenever an HTTP request is received and will respond based on data in the body or a query string. Here we’re going to title the function ‘orders’.

It will take a couple of seconds to create the function before it shows in the Functions tab. Once your new function is visible, you can select it. To edit the trigger, click on Integration; for this ‘orders’ function, we only want to accept ‘post’ requests, so we can untick ‘get’ under Selected HTTP methods.

Once the trigger is saved, we can return to our API Management service, shop-demo, where we can import an API.

API management service > API > shop-demo > Import > Function App > orders method > Import

After a few seconds, the function app should finish importing. ‘Orders’ now appears among the operations for our API, where it can be used.

Crucially, we are using this API management to redirect traffic away from our API and onto our Azure function. What this means is that we can scale this request; every time someone clicks ‘buy’, it’s going to scale, which means that Mark and Russ can rest assured that no data (or customers) will be lost in the process.

Azure Storage Queues

On the Consumption plan, Azure Functions can run up to 200 instances at the same time, which means that up to 200 people can make purchases at the same time and have them processed successfully.

So what happens if you have more than 200 requests coming into your site? For several hundred more, this shouldn’t raise any issues, but for something more substantial, say, 1,000 requests, there is a plan B.

What is an Azure Storage Queue?

Azure Queue Storage is a service for storing a large number of messages. Essentially, this allows users to get the messages that are coming from the UI, in this case the purchasing of some tequila, and place them in a queue until they’re ready to process.
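To make the buffering idea concrete, here is a minimal in-memory sketch of a burst of orders being accepted at once and then worked through at whatever pace the processing side can manage. It uses an ordinary `Queue<string>` as a stand-in for the storage queue, and the `DrainInRounds` helper, the burst size and the capacity figure are all illustrative assumptions rather than anything Azure provides.

```csharp
using System;
using System.Collections.Generic;

// Enqueue a burst of orders, then drain the queue in rounds of at most
// `capacity` orders. Returns the number of rounds that were needed.
static int DrainInRounds(int burstSize, int capacity, List<string> sink)
{
    var queue = new Queue<string>();
    for (int n = 1; n <= burstSize; n++)
        queue.Enqueue($"order-{n}");   // accepted immediately, processed later

    int rounds = 0;
    while (queue.Count > 0)
    {
        int batch = Math.Min(capacity, queue.Count);
        for (int k = 0; k < batch; k++)
            sink.Add(queue.Dequeue()); // oldest orders are processed first
        rounds++;
    }
    return rounds;
}

var handled = new List<string>();
int roundsNeeded = DrainInRounds(1000, 200, handled);
Console.WriteLine($"{handled.Count} orders processed in {roundsNeeded} rounds");
// prints "1000 orders processed in 5 rounds"
```

No order is rejected when the burst arrives; the queue simply grows and then shrinks as capacity becomes available.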

What’s brilliant about the storage queue is that it can store messages of any format of up to 64 KB per message, with the maximum size of a single queue being 500 TiB (roughly 550 TB). On top of this, the service is durable: messages stay in the queue even if the servers go down.

Even if a message can’t be processed and an error is raised in the application, it will be moved to the ‘poison queue’, which means that it still won’t be lost. With this level of safety, users can be confident that no data is lost.

Allocating a failed message to the poison queue will also prove useful for preventing issues in the future. If there is an item in the poison queue, for example, developers can easily share the message with colleagues to get their insight on possible solutions.
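The poison-queue behaviour can be sketched as a small in-memory simulation. This is not the Azure SDK: the queues are plain collections and the `ProcessOrder` handler is hypothetical. It mirrors the default behaviour of queue-triggered functions, where a message that fails five times is moved to a queue named after the original with a `-poison` suffix.

```csharp
using System;
using System.Collections.Generic;

const int MaxDequeueCount = 5; // default retry limit for queue-triggered functions

var orders = new Queue<(string Body, int DequeueCount)>();
var poison = new List<string>();    // stands in for the 'orders-poison' queue
var completed = new List<string>();

// Hypothetical handler: rejects anything that is not a tequila order.
void ProcessOrder(string orderBody)
{
    if (!orderBody.StartsWith("tequila-"))
        throw new InvalidOperationException("Unrecognised order.");
    completed.Add(orderBody);
}

orders.Enqueue(("tequila-blanco", 0));
orders.Enqueue(("not-an-order", 0));   // malformed message
orders.Enqueue(("tequila-reposado", 0));

while (orders.Count > 0)
{
    var (body, dequeueCount) = orders.Dequeue();
    dequeueCount++;
    try
    {
        ProcessOrder(body);
    }
    catch (InvalidOperationException)
    {
        if (dequeueCount >= MaxDequeueCount)
            poison.Add(body);                      // give up, but keep the message
        else
            orders.Enqueue((body, dequeueCount));  // put it back for another attempt
    }
}

Console.WriteLine($"completed: {completed.Count}, poison: {poison.Count}");
// prints "completed: 2, poison: 1"
```

The two valid orders complete normally, while the malformed one is retried and then set aside intact, ready to be inspected later.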

Setting up an Azure Storage Queue

To set up a queue, you first need to create a storage account. Once this is set up, select Queues from the sidebar. Here you can create your queue which, for our example, will be called ‘orders’.

Returning to the function, you can now configure it so that messages are automatically added to the queue. Helpfully, we can add an output that connects our function app to a storage queue.

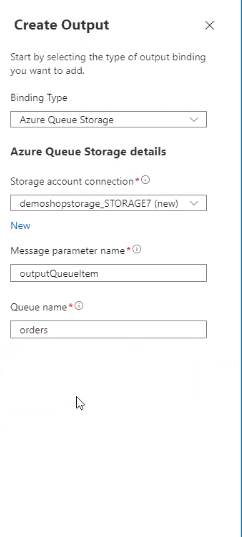

Orders > Integration > Outputs > + Add output > Storage account connection > Binding Type > New

From the dropdown menu, you can select Azure Queue Storage. Selecting this type will prompt you to further configure the details for the queue, which includes:

- Storage account connection: find and select your storage account.

- Message parameter name: make a note of the parameter name, as this variable is used later in the code.

- Queue name: in line with the name we set earlier, we will call this queue ‘orders’.

Once these parameters have been configured, simply click ‘ok’ and your output should be added.

```csharp
#r "Newtonsoft.Json"

using System.Net;
using Microsoft.AspNetCore.Mvc;
using Microsoft.Extensions.Primitives;
using Newtonsoft.Json;

public static async Task<IActionResult> Run(HttpRequest req, ICollector<string> outputQueueItem, ILogger log)
{
    log.LogInformation("C# HTTP trigger function processed a request.");

    // The name can be supplied in the query string or in the JSON body.
    string name = req.Query["Name"];

    string requestBody = await new StreamReader(req.Body).ReadToEndAsync();
    dynamic data = JsonConvert.DeserializeObject(requestBody);
    name = name ?? data?.Name;

    // Add the order to the 'orders' queue through the output binding.
    outputQueueItem.Add(name);

    string responseMessage = "Thank you for ordering, " + name + "! Your order is being processed by the Azure Function.";

    return new OkObjectResult(responseMessage);
}
```

With some slight modifications to the original code, you can activate this queue and ensure that any upcoming messages will be added to the queue. So what can we do with a message once it shows in the queue?

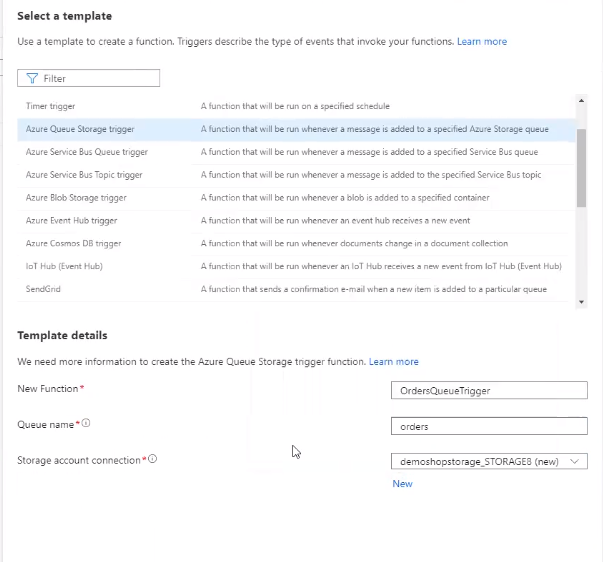

Let’s set up another trigger.

Functions > + Create > Azure Queue Storage trigger > title your New Function (‘OrderQueueTrigger’ in this example) > Queue name (orders) > Storage account connection (demoshopstorage_STORAGE8 (new)) > Create

Once the queue trigger has been created, it will begin processing messages straight away. Evidence of this can be found in the logs:

C# Queue trigger function processed

We've progressed from an API/UI infrastructure to a stage where, instead of using one API endpoint, we've overridden it to use an entirely different resource: a function. The function adds each message to a queue so that we never lose it, which lets us handle a large number of messages and process them when our resources are available, all thanks to our storage queue trigger.

System Architecture

We used to have a pretty simple architecture that consisted of an API, a user interface, and a database. We now have a user interface, an API, API Management (which communicates with both the API and the UI), and an orders function.

After that, the orders function connects with the queue, which then communicates with the orders queue trigger. Furthermore, both the API and the trigger interact with the database.

While this is a little more complex, there are many benefits, especially when it comes to flexibility. The Orders function, for example, can scale massively, as can the Orders Queue Trigger.

Service Costs

Azure App Service - £136 per month

- 1 instance: 2 cores, 3.5 GB RAM, 50 GB storage

- 50 million requests

- 260ms per request

Azure Function App - £136 per month

- 190 million requests

- 400ms per request

Comparing the two, we can see that for the same price the Azure Function App handles almost four times as many requests in a month, and each request can run for longer (400 ms rather than 260 ms). In other words, each request can do a lot more work than on the Azure App Service and still cost the same.

Of course, you might not need 190 million requests, and a request time of 260 ms may well be sufficient. In that case, users could still save massively, as reflected in the estimate below:

Azure Function App - £22 per month

- 50 million requests

- 260ms per request

This is a massive decrease from £136 per month, particularly considering that the Azure App Service price covers only one instance. If you were to add another instance, you would be billed a further £136.

The Function App is cheaper because, unlike an App Service where the hardware runs continuously, you only pay for what you use (on the Consumption plan). You are billed when a request is sent to the function app, not while it sits idle.

Azure Functions also scale automatically and only care about requests. Ultimately, the number of requests you receive is what determines your usage.
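The comparison in this section comes down to a few lines of arithmetic. The sketch below uses only the figures quoted in this article (they are the article's estimates, not official Azure pricing), and the `CostPerMillion` helper is introduced purely for illustration.

```csharp
using System;

// Figures quoted in this article: GBP per month, and millions of requests.
const decimal AppServiceCost = 136m;           // 1 instance
const decimal AppServiceRequests = 50m;        // at 260 ms per request
const decimal FunctionCostSameLoad = 22m;      // 50 million requests at 260 ms
const decimal FunctionRequestsSameCost = 190m; // what 136 GBP buys on Functions

static decimal CostPerMillion(decimal monthlyCost, decimal millionsOfRequests) =>
    monthlyCost / millionsOfRequests;

decimal appServicePerMillion = CostPerMillion(AppServiceCost, AppServiceRequests);     // 2.72
decimal functionPerMillion = CostPerMillion(FunctionCostSameLoad, AppServiceRequests); // 0.44
decimal requestRatio = FunctionRequestsSameCost / AppServiceRequests;                  // 3.8
decimal saving = AppServiceCost - FunctionCostSameLoad;                                // 114
decimal savingPercent = Math.Round(saving / AppServiceCost * 100m, 1);                 // 83.8
decimal twoAppServiceInstances = AppServiceCost * 2;                                   // 272

Console.WriteLine($"App Service: {appServicePerMillion} GBP per million requests");
Console.WriteLine($"Function App: {functionPerMillion} GBP per million requests");
Console.WriteLine($"Requests for the same price: x{requestRatio}");
Console.WriteLine($"Saving at the same load: {saving} GBP ({savingPercent}%)");
Console.WriteLine($"Two App Service instances: {twoAppServiceInstances} GBP");
```

On these figures, the same 50 million requests cost roughly a sixth as much on the Function App, and the gap widens with every extra App Service instance.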

Conclusion

So what does all this mean for Russ, Mark and their tequila business? For one, they can be confident that their API is now able to support many customers coming through the site. With an Azure API Management service in place, they can override individual endpoints, reroute them away from their API and, as a result, streamline their operations.

Having this capacity to manage traffic also means that their site is future-proof and ready to accept a growing customer base. Using API Management to shift traffic away from the API and towards their Azure Function means that they can scale requests.

The Azure Function reinforces this newfound scalability by allowing the website to run on up to 200 instances when used with a Consumption plan. And, should demand outgrow those 200 instances, Russ and Mark can have peace of mind that their site can handle the traffic, thanks to their Azure Storage Queue.

With this service, messages can be managed effectively; developers can queue all messages from the UI until they’re ready to process.

Using Russ and Mark’s scenario as a starting point, this article has shown how businesses can benefit massively by adopting a microservices architecture. While the demo covered may look complex, I hope to have shown just a few of the many advantages that this setup can bring, particularly in terms of flexibility, scalability and efficiency.

This article was originally delivered as a talk for the Leeds Digital Festival.

Audacia is a software development company based in the UK, headquartered in Leeds. View more technical insights from our teams of consultants, business analysts, developers and testers on our technology insights blog.
