What if AI models could fail over time without warning?

A study by researchers from MIT, Harvard, and Cambridge found that 91% of machine learning models experience performance degradation as conditions change, underscoring the critical need for proactive system management.

In the race to innovate, organisations frequently prioritise speed over sustainability. While this approach delivers short-term wins, it often accumulates inefficiencies that stifle scalability, increase costs, and hinder future innovation. For AI and machine learning systems, technical debt extends beyond code to include complex dependencies in data, models, and operational workflows.

In this article, we explore the unique nature of technical debt in AI and machine learning, how it differs from technical debt in traditional software engineering, and actionable MLOps (Machine Learning Operations) strategies that can help manage it effectively.

What is Technical Debt in AI/ML Systems?

Technical debt refers to the inefficiencies and costs incurred when expedient, short-term solutions are prioritised over sustainable, long-term approaches. While technical debt is familiar in software development, it takes on unique forms in AI/ML systems due to their reliance on data, models, and complex workflows.

Unmanaged technical debt can lead to slower development cycles, higher operational costs, and reduced trust in AI/ML systems.

A Google Research paper describes machine learning as “the high-interest credit card of technical debt”: shortcuts compound over time and significantly increase the cost of future innovation.

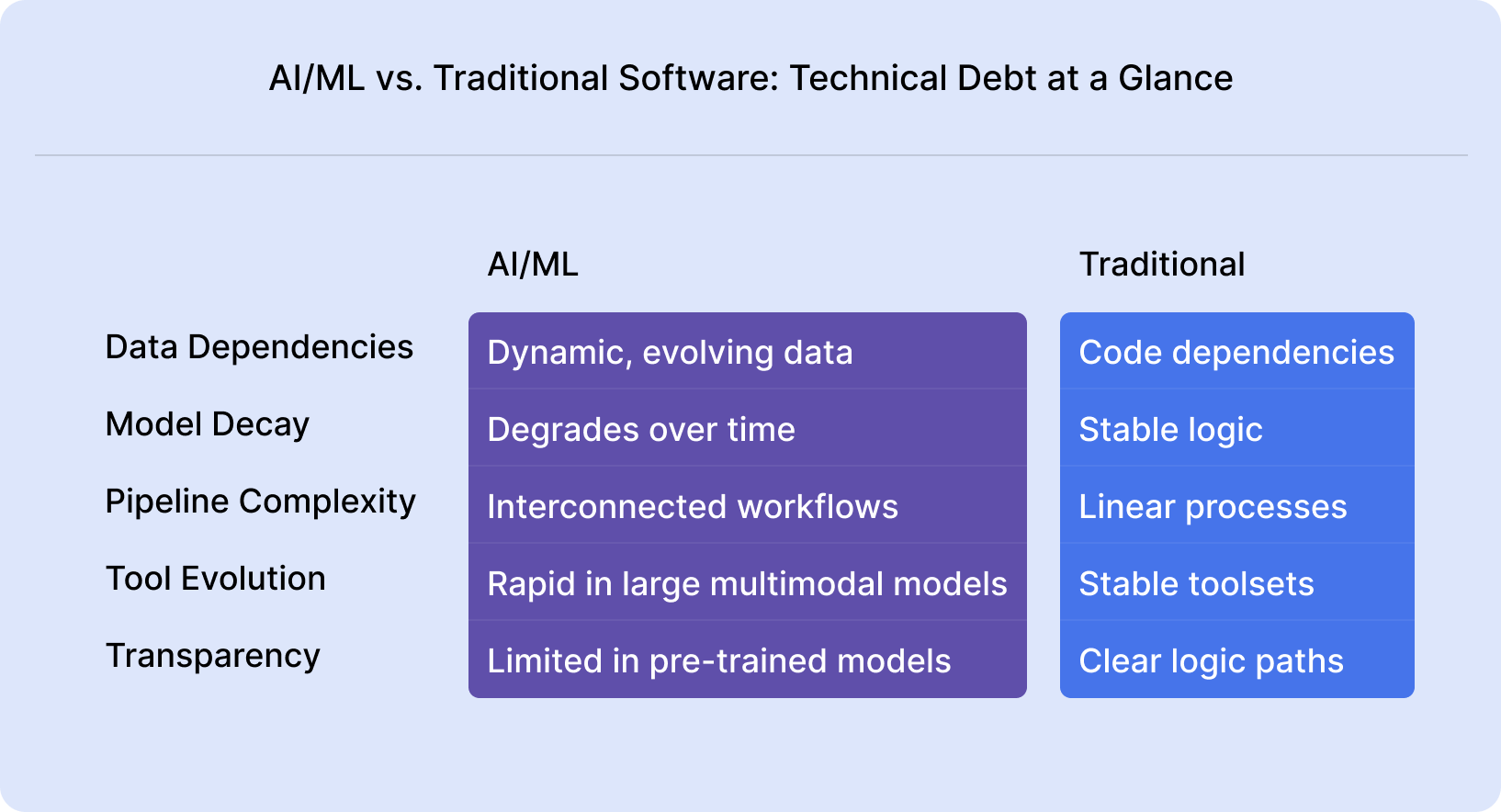

How AI/ML Technical Debt Differs from Traditional Software Engineering

While technical debt exists in all software systems, its nature is fundamentally different in AI/ML. These differences arise from the unique dependencies and workflows of AI/ML systems:

Key Differences:

- Data dependencies:

AI/ML: Relies on continuously evolving data. Changes in quality, structure, or distribution directly impact system performance.

Software Engineering: Relies on code dependencies, which static-analysis tools can inspect and automatically scan for potential security vulnerabilities.

- Model decay:

AI/ML: Models degrade over time due to concept drift, requiring regular retraining.

Software Engineering: Software logic remains stable unless actively changed.

- Pipeline complexity:

AI/ML: Involves interconnected workflows across data ingestion, pre-processing, feature engineering, model training, and deployment. A failure in any stage can cascade across the system.

Software Engineering: Follows relatively linear workflows with fewer interdependencies.

- Evolving toolsets:

AI/ML: Modern models operate in a fast-changing ecosystem of frameworks and libraries, leading to frequent compatibility and maintenance challenges.

Software Engineering: Uses relatively more stable and standardised toolsets.

- Black-box models:

AI/ML: Large pre-trained models, such as Claude or GPT-4o, often offer limited transparency and explainability, making it harder to identify and trace inconsistencies.

Software Engineering: Systems, whilst potentially complex, have defined logic paths, making debugging and automated testing relatively simpler.
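The data-dependency difference is the easiest one to make concrete. A minimal sketch, assuming a tabular model whose inputs arrive as plain Python dictionaries, is to validate every incoming batch against an explicit contract before training or inference, so that upstream changes fail loudly instead of silently degrading the model. The column names, types, and bounds in `EXPECTED_SCHEMA` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ColumnSpec:
    """Expected type and value range for one input column."""
    dtype: type
    min_value: float
    max_value: float


# Hypothetical data contract for a tabular model's inputs.
EXPECTED_SCHEMA = {
    "age": ColumnSpec(int, 18, 100),
    "income": ColumnSpec(float, 0.0, 1_000_000.0),
}


def validate_batch(rows: list[dict]) -> list[str]:
    """Return human-readable violations; an empty list means the batch passed."""
    errors = []
    for i, row in enumerate(rows):
        missing = EXPECTED_SCHEMA.keys() - row.keys()
        if missing:
            errors.append(f"row {i}: missing columns {sorted(missing)}")
        for name, spec in EXPECTED_SCHEMA.items():
            if name not in row:
                continue
            value = row[name]
            # Strict type check: a structural change upstream (e.g. ints sent as strings) is caught here.
            if not isinstance(value, spec.dtype):
                errors.append(
                    f"row {i}: {name} has type {type(value).__name__}, expected {spec.dtype.__name__}"
                )
            elif not spec.min_value <= value <= spec.max_value:
                errors.append(f"row {i}: {name}={value} outside [{spec.min_value}, {spec.max_value}]")
    return errors


batch = [{"age": 34, "income": 52_000.0}, {"age": 150, "income": 48_000.0}, {"age": 40}]
for problem in validate_batch(batch):
    print(problem)
```

Keeping a contract like this in code, under version control, turns an implicit data dependency into something that can be reviewed and tested like any other dependency.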

How MLOps Mitigates Technical Debt

MLOps (Machine Learning Operations) provides a framework for managing the complexity of AI/ML workflows, reducing technical debt and enabling scalable, maintainable systems. Key practices include:

Workflow automation:

Automating tasks such as data pre-processing, model training, and deployment with tools such as Kubeflow or MLflow reduces the manual interventions that lead to inefficiencies.
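Orchestrators such as Kubeflow Pipelines express a workflow as a graph of steps. The sketch below is not their API; it uses only Python's standard-library `graphlib` (Python 3.9+) to illustrate the underlying idea: stages and their dependencies are declared once, and a runner executes them in a valid order without manual hand-offs. The stage names and toy task functions are hypothetical.

```python
from graphlib import TopologicalSorter
from typing import Callable

# Hypothetical pipeline: each stage lists the stages whose outputs it needs.
DEPENDENCIES = {
    "ingest": set(),
    "preprocess": {"ingest"},
    "train": {"preprocess"},
    "evaluate": {"train"},
    "deploy": {"evaluate"},
}

# Toy task functions; each reads earlier outputs from a shared context dict.
TASKS: dict[str, Callable[[dict], object]] = {
    "ingest": lambda ctx: [1.0, 2.0, 3.0, 4.0],
    "preprocess": lambda ctx: [x / max(ctx["ingest"]) for x in ctx["ingest"]],
    "train": lambda ctx: sum(ctx["preprocess"]) / len(ctx["preprocess"]),  # toy "model": a mean
    "evaluate": lambda ctx: abs(ctx["train"] - 0.5),  # toy error against a target of 0.5
    "deploy": lambda ctx: f"deployed model with parameter {ctx['train']:.3f}",
}


def run_pipeline(tasks: dict, dependencies: dict) -> dict:
    """Execute each stage once, in an order that respects its dependencies."""
    context = {}
    for stage in TopologicalSorter(dependencies).static_order():
        context[stage] = tasks[stage](context)
    return context


results = run_pipeline(TASKS, DEPENDENCIES)
print(results["deploy"])
```

Because the runner, not a person, decides what runs next, adding a stage means editing the dependency graph rather than a manual runbook.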

Version control and reproducibility:

Versioning datasets, models, and code together, with tools such as DVC (Data Version Control) and Git, makes results reproducible, ensures consistency, and reduces errors.
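DVC keeps large data files out of Git and tracks them by content hash, while Git versions the code. A minimal sketch of the underlying idea, using only the standard library rather than DVC's actual file format, is a manifest that ties a model to the exact bytes of its training data, the code commit, and its hyperparameters. The commit string and parameter names in the example are hypothetical.

```python
import hashlib
import json


def content_hash(data: bytes) -> str:
    """SHA-256 of an artefact's bytes: any change to the data produces a different hash."""
    return hashlib.sha256(data).hexdigest()


def build_manifest(dataset: bytes, model: bytes, code_commit: str, params: dict) -> str:
    """Record which data, code, and settings produced a model, as deterministic JSON."""
    manifest = {
        "dataset_sha256": content_hash(dataset),
        "model_sha256": content_hash(model),
        "code_commit": code_commit,  # e.g. the output of `git rev-parse HEAD`
        "params": params,
    }
    # sort_keys gives a stable key order (including inside params), so identical runs
    # produce identical manifests that diff cleanly under version control.
    return json.dumps(manifest, sort_keys=True, indent=2)


print(build_manifest(b"age,income\n34,52000\n", b"<model weights>", "3f2a9c1", {"learning_rate": 0.01}))
```

Committing a manifest like this alongside the code means any model in production can be traced back to the data and settings that produced it.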

Real-time monitoring and maintenance:

Continuously monitoring model inputs and performance helps detect concept drift early and can trigger automated retraining workflows.
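One common heuristic for this kind of monitoring is the Population Stability Index (PSI), which compares the distribution of a feature in production with its distribution in the training data. A minimal sketch follows, using equal-width bins taken from the reference sample. PSI measures shifts in input data, which often accompany concept drift but are not the same thing. The 0.25 retraining threshold is a widely used rule of thumb rather than a standard, and the `should_retrain` hook is hypothetical.

```python
import math


def psi(reference: list[float], current: list[float], bins: int = 10) -> float:
    """Population Stability Index: sum over bins of (actual - expected) * ln(actual / expected)."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # guard against a constant reference sample

    def proportions(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            # Equal-width bins over the reference range; out-of-range values go to the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # A small floor avoids log(0) and division by zero for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    expected, actual = proportions(reference), proportions(current)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


def should_retrain(reference: list[float], current: list[float], threshold: float = 0.25) -> bool:
    """Hypothetical retraining hook: 0.25 is a common rule-of-thumb threshold, not a standard."""
    return psi(reference, current) > threshold


training_sample = [i / 100 for i in range(100)]
live_sample = [x + 0.5 for x in training_sample]  # simulated shift in production inputs
if should_retrain(training_sample, live_sample):
    print("Drift detected: schedule a retraining run")
```

In practice a check like this would run per feature on a schedule, with the retraining trigger wired into the pipeline orchestrator.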

Modular pipelines:

Modular pipelines allow for component reuse, reducing duplication of effort and simplifying debugging. For example, breaking a pipeline into distinct modules for pre-processing, training, and deployment enables targeted updates.
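A minimal sketch of that modularity, assuming every module exposes the same `run` method: the pipeline depends only on the interface, so one stage can be replaced or re-tested without touching the others. The two pre-processing modules here are hypothetical stand-ins for real stages.

```python
from typing import Protocol


class Step(Protocol):
    """Common interface that every pipeline module implements."""

    def run(self, data: list[float]) -> list[float]: ...


class ClipOutliers:
    """Hypothetical module: clamp values into [-limit, limit]."""

    def __init__(self, limit: float):
        self.limit = limit

    def run(self, data: list[float]) -> list[float]:
        return [max(-self.limit, min(self.limit, x)) for x in data]


class MinMaxScale:
    """Hypothetical module: rescale values to [0, 1]."""

    def run(self, data: list[float]) -> list[float]:
        lo, hi = min(data), max(data)
        span = (hi - lo) or 1.0  # avoid division by zero for constant input
        return [(x - lo) / span for x in data]


class Pipeline:
    """Runs its steps in order, passing each step's output to the next."""

    def __init__(self, steps: list[Step]):
        self.steps = steps

    def run(self, data: list[float]) -> list[float]:
        for step in self.steps:
            data = step.run(data)
        return data


pipeline = Pipeline([ClipOutliers(limit=10.0), MinMaxScale()])
print(pipeline.run([-50.0, 0.0, 10.0]))

# Targeted update: swap only the clipping stage; the scaling stage is untouched.
pipeline.steps[0] = ClipOutliers(limit=100.0)
print(pipeline.run([-50.0, 0.0, 10.0]))
```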

Cross-team collaboration:

Standardised workflows foster alignment between data scientists, software engineers, data engineers and DevOps teams, minimising miscommunication.

Governance and compliance:

Centralised tracking of data lineage and model decisions supports compliance with regulations such as the GDPR.
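One practical payoff of lineage tracking is being able to answer questions such as "which models were trained on this person's data?" when handling a GDPR erasure request. The sketch below assumes a hypothetical in-memory store linking record IDs to datasets and datasets to models; a real system would persist this in a metadata store, but the query is conceptually the same. All dataset, model, and record IDs are invented for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class LineageStore:
    """Hypothetical lineage log: which records fed which datasets, and which datasets fed which models."""
    dataset_records: dict[str, set[str]] = field(default_factory=dict)
    model_datasets: dict[str, set[str]] = field(default_factory=dict)

    def register_dataset(self, dataset_id: str, record_ids: set[str]) -> None:
        self.dataset_records[dataset_id] = set(record_ids)

    def register_model(self, model_id: str, dataset_ids: set[str]) -> None:
        self.model_datasets[model_id] = set(dataset_ids)

    def models_using_record(self, record_id: str) -> set[str]:
        """Every model trained on a dataset that contains this record."""
        datasets = {d for d, records in self.dataset_records.items() if record_id in records}
        return {m for m, used in self.model_datasets.items() if used & datasets}


store = LineageStore()
store.register_dataset("customers-2024-01", {"cust-1", "cust-2"})
store.register_dataset("customers-2024-02", {"cust-3"})
store.register_model("churn-v1", {"customers-2024-01"})
store.register_model("churn-v2", {"customers-2024-01", "customers-2024-02"})
print(sorted(store.models_using_record("cust-1")))
```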

MLOps practices not only help to mitigate technical debt but also lay the foundation for long-term scalability and increased innovation.

The Case for MLOps

Adopting MLOps is not just a technical enhancement; it can directly affect a business’s ability to innovate, scale, and remain competitive. As AI/ML systems become integral to decision-making and customer engagement, the ability to manage them efficiently and effectively becomes increasingly important.

Cost savings through efficiency

Technical debt in AI/ML systems drives up operational costs, often forcing teams to spend disproportionate time on maintenance rather than innovation. MLOps introduces automation and standardisation that reduce inefficiencies across the development lifecycle.

By automating tasks like model retraining, monitoring, and deployment, MLOps minimises human intervention, reducing the risk of errors and lowering operational costs.

Faster time-to-market

In competitive industries, speed is critical. MLOps enables organisations to deploy AI/ML models faster and iterate more frequently, shortening the time between ideation and value delivery.

Modular pipelines and automated workflows smooth the transition from development to production, reducing delays caused by manual interventions. Organisations that apply CI/CD (continuous integration and continuous delivery) practices to AI/ML can ship model updates far more quickly.
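In a CI/CD setup for ML, a common step is an automated gate that compares a retrained candidate against the model currently in production before anything is deployed. A minimal sketch, assuming both models have already been evaluated on the same hold-out set; the metric names and thresholds are hypothetical.

```python
def promotion_gate(
    candidate: dict,
    production: dict,
    min_gain: float = 0.0,
    max_latency_ms: float = 100.0,
) -> tuple[bool, str]:
    """Decide whether a retrained candidate may replace the production model.

    Both dicts hold hypothetical metrics ("accuracy", "latency_ms") measured on the same hold-out set.
    """
    gain = candidate["accuracy"] - production["accuracy"]
    if gain < min_gain:
        return False, f"accuracy gain {gain:+.3f} is below the required {min_gain:+.3f}"
    if candidate["latency_ms"] > max_latency_ms:
        return False, f"latency {candidate['latency_ms']:.0f} ms exceeds the {max_latency_ms:.0f} ms budget"
    return True, "candidate approved for deployment"


approved, reason = promotion_gate(
    {"accuracy": 0.91, "latency_ms": 42.0},
    {"accuracy": 0.90, "latency_ms": 38.0},
)
print(approved, reason)
```

A CI job could call a function like this and fail the build when it returns `False`, so that only models that clear the gate reach the deployment step.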

Improved model reliability

AI/ML systems often face performance drift, where model accuracy declines over time due to changes in data distributions or external factors. MLOps addresses this through continuous monitoring and proactive interventions.

Automated monitoring detects performance drift early, triggering alerts or retraining workflows before system failures occur. For industries relying on AI for mission-critical operations, such as healthcare or finance, reliable performance can be the difference between compliance and costly errors.
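Performance can also be tracked directly once ground-truth labels arrive. A minimal sketch, assuming predictions can later be matched to their actual outcomes, keeps a sliding window of recent results and raises a flag when accuracy falls below a floor. The window size and floor are hypothetical and would depend on the domain.

```python
from collections import deque
from typing import Optional


class AccuracyMonitor:
    """Track accuracy over the most recent labelled predictions and flag sustained drops."""

    def __init__(self, window: int = 500, floor: float = 0.85):
        # 1 = correct, 0 = wrong; once full, the oldest outcome drops off automatically.
        self.outcomes: deque = deque(maxlen=window)
        self.floor = floor

    def record(self, prediction, actual) -> None:
        self.outcomes.append(int(prediction == actual))

    def accuracy(self) -> Optional[float]:
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def needs_attention(self) -> bool:
        """Alert only once the window is full, so a few early errors do not trigger retraining."""
        return len(self.outcomes) == self.outcomes.maxlen and self.accuracy() < self.floor
```

Requiring a full window trades a slightly slower alert for fewer false alarms; a real deployment would tune both the window and the floor against the cost of a missed failure.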

Scalability for long-term growth

As organisations scale their AI/ML initiatives, managing multiple models and workflows becomes increasingly complex. MLOps provides the infrastructure to scale without multiplying inefficiencies.

Reusable components allow organisations to replicate and scale workflows across teams and projects, while centralised tools for tracking and versioning datasets, models, and code enable that scaling without sacrificing governance.
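A shared model registry is one of those centralised tools. Hosted options exist (MLflow, for example, includes a Model Registry), but the sketch below is not their API. It is a hypothetical in-memory registry that shows the core idea: numbered versions, each linked to a hash of its training data, with exactly one version marked as production. The storage paths and hash values are invented.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ModelVersion:
    version: int
    artefact_uri: str  # hypothetical storage location of the trained model
    dataset_hash: str  # content hash of the training data, for traceability
    stage: str = "staging"


@dataclass
class ModelRegistry:
    """Hypothetical in-memory registry; a shared service would persist this for all teams."""
    models: dict[str, list[ModelVersion]] = field(default_factory=dict)

    def register(self, name: str, artefact_uri: str, dataset_hash: str) -> ModelVersion:
        """Add a new version of `name`; versions are numbered 1, 2, 3, ..."""
        versions = self.models.setdefault(name, [])
        entry = ModelVersion(len(versions) + 1, artefact_uri, dataset_hash)
        versions.append(entry)
        return entry

    def promote(self, name: str, version: int) -> None:
        """Make one version the production model and archive the previous production version."""
        for entry in self.models[name]:
            if entry.stage == "production":
                entry.stage = "archived"
        self.models[name][version - 1].stage = "production"

    def production(self, name: str) -> Optional[ModelVersion]:
        """Return the current production version, or None if nothing has been promoted."""
        return next((e for e in self.models.get(name, []) if e.stage == "production"), None)


registry = ModelRegistry()
registry.register("churn", "models/churn/1", "sha256:abc")
registry.register("churn", "models/churn/2", "sha256:def")
registry.promote("churn", 2)
print(registry.production("churn"))
```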

Risk mitigation and compliance

AI/ML systems must meet stringent regulatory standards, especially in industries like finance, healthcare, and insurance. MLOps supports compliance by providing transparency, traceability, and robust governance.

Centralised tracking of data lineage helps demonstrate that datasets meet privacy regulations such as the GDPR, while integrating explainable AI (XAI) tools into MLOps pipelines enhances model interpretability, a critical requirement for compliance in high-stakes domains.
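Explainability methods vary widely, from SHAP values to model-specific techniques. One of the simplest model-agnostic methods is permutation importance: shuffle one feature at a time and measure how much the model's score drops. scikit-learn provides this as `sklearn.inspection.permutation_importance`; the standard-library sketch below only illustrates the idea, using a hypothetical toy model that ignores its second feature.

```python
import random


def accuracy(y_true, y_pred):
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def permutation_importance(predict, X, y, metric=accuracy, n_repeats=5, seed=0):
    """Mean drop in score when each feature column is shuffled; a larger drop means heavier reliance."""
    rng = random.Random(seed)
    baseline = metric(y, [predict(row) for row in X])
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            # Rebuild rows with only feature j permuted; the other features are untouched.
            shuffled = [row[:j] + [value] + row[j + 1:] for row, value in zip(X, column)]
            drops.append(baseline - metric(y, [predict(row) for row in shuffled]))
        importances.append(sum(drops) / n_repeats)
    return importances


# Hypothetical toy model: uses feature 0 and ignores feature 1.
def toy_model(row):
    return int(row[0] > 0.5)


X = [[i / 20, (i * 7 % 20) / 20] for i in range(20)]
y = [toy_model(row) for row in X]
print(permutation_importance(toy_model, X, y))
```

Feature 1 scores exactly zero because the model never reads it; that kind of evidence about what a model does and does not rely on is what auditors in high-stakes domains tend to ask for.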

Enhanced collaboration

MLOps fosters collaboration between cross-functional teams by standardising workflows and providing shared platforms for development, testing, and deployment.

Data scientists, engineers, and DevOps teams work within a unified framework, reducing silos and miscommunication. Shared tools and processes let teams focus on innovation rather than on resolving operational bottlenecks.

Competitive advantage

Organisations that adopt MLOps early gain a significant competitive edge by being more agile, reliable, and innovative. The ability to quickly deploy and scale AI/ML systems allows these companies to outperform their peers in customer engagement, operational efficiency, and innovation.

Example: NatWest

NatWest Group faced significant challenges in scaling AI/ML systems due to technical debt in data pipelines and workflows. NatWest implemented an MLOps platform using Amazon SageMaker Studio, standardising their processes and automating repetitive tasks.

NatWest reduced the technical debt in its existing models and created reusable artefacts to speed up future model development.

This case demonstrates how MLOps enables businesses to address technical debt while driving innovation.

Challenges to MLOps Adoption

While MLOps offers significant benefits, adoption is not without challenges.

Organisations should weigh upfront costs (investment in tools and infrastructure can be substantial), skills gaps, scalability challenges across teams and geographies, and the expected ROI (particularly for smaller organisations) during their initial AI exploratory phases.

Conclusion

Left unchecked, technical debt can derail even the most innovative technology initiative. MLOps offers a strategic framework for managing inefficiencies and mitigating technical debt in AI and machine learning platforms, enabling scalability and driving sustainable growth.