This blog post has been adapted from this Tech Talk by Chris Bentley, Lead Data Scientist at Audacia.

Navigating AI’s Expanding Landscape

Increasingly, AI is being woven into the fabric of modern engineering, whether through enterprise models like ChatGPT, off-the-shelf cloud tools or bespoke machine learning pipelines.

However, as AI capabilities grow, so does the chance of unintended consequences: discrimination, security vulnerabilities or even loss of control over powerful systems. The solution is to ensure governance is considered at every part of the pipeline; it should be a robust, evolving framework grounded in clear principles, backed by thoughtful policies and shaped by the right people – enabling them to take ownership and drive responsible outcomes.

This article sets out a practical foundation for technology leaders looking to implement or update AI governance.

What We Mean by AI (And Why It Matters for Governance)

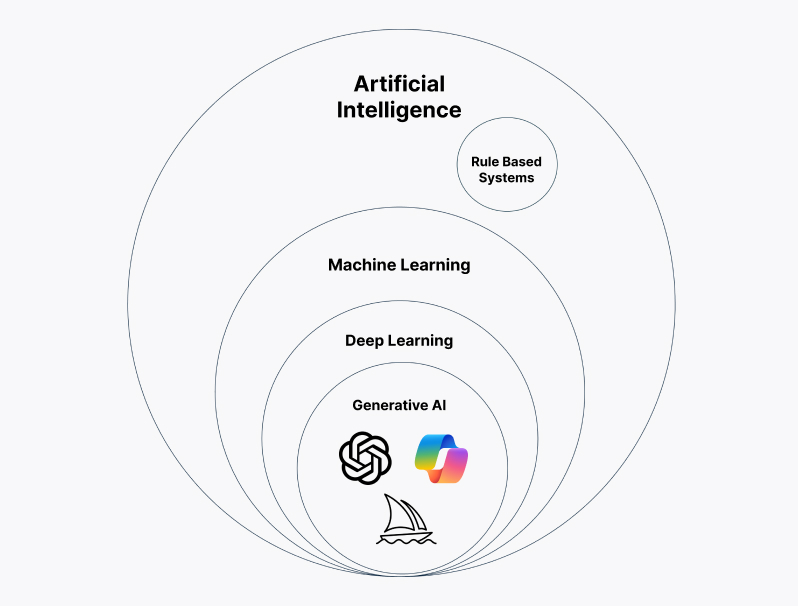

Before discussing governance, it helps to define what we mean by “AI.”

- Rule-based systems: The earliest AI was entirely programmatic – explicit rules codified by humans to mimic decision-making in well-understood domains.

- Machine learning: A huge leap forward. Algorithms learn patterns from data to make predictions or decisions without explicitly coded rules for every scenario.

- Deep learning: A subset of machine learning that uses multi-layered neural networks to capture complex patterns in vast datasets.

- Generative AI: At the innermost core sit deep learning models trained on massive datasets to produce new content: text, code, images or audio.

Most of today’s governance debate is triggered by generative AI. In practice, however, governance concerns apply to AI in all its forms. Whether you’re building a tailored fraud detection model or experimenting with ChatGPT prompts, the same foundational risks around ethics, security and control apply.

A Working Definition of AI Governance

At its heart, AI governance is a framework of guidelines, processes and practices to ensure AI systems are:

- Ethical: respecting human values and avoiding harm

- Safe: robust, reliable and aligned with your organisation’s attitude to risk

- Transparent: open to inspection, traceable and explainable

And crucially, governance should span the full lifecycle – from initial scoping and development to deployment, monitoring and daily use.

In a simple analogy, AI governance is like the rules of the road. Your business context sets the landscape, your developers are the drivers, and AI is the vehicle. Governance provides the signposts, traffic lights and certifications to ensure you reach your destination (the use case) safely – without crashing the system or harming bystanders along the way.

Why Governance Matters More Than Ever

Ignoring AI governance comes with very real consequences:

- Zillow: Their machine learning system for home buying was trained on outdated market data. Without ongoing governance to detect drift or continuously fine-tune the model with new data, it consistently overbid, racking up losses of over $500 million and forcing layoffs and program shutdowns. (InsideAI News, 2021)

- Samsung: Engineers pasted proprietary code into ChatGPT to debug problems, unaware of the implications. The result was uncontrolled exposure of intellectual property, forcing an emergency ban on AI use. (Forbes, 2023)

Additionally, a UK poll revealed that one in five companies had experienced data leaks due to ungoverned GenAI use. Meanwhile, 92% of Fortune 500 firms already use ChatGPT, sometimes through informal “shadow AI”, where employees independently adopt tools without IT or legal sign-off. (Reuters, 2024)

Governance isn’t there to slow teams down. It’s your best route to:

- Build trust and adoption, internally and with customers

- Mitigate operational, legal and financial risk

- Ensure your AI systems are auditable, reproducible and scalable

- Shorten time to production through clear, standardised practices

- Attract top technical talent who care about ethical, forward-thinking engineering

Core Principles: The Ethical Backbone

The starting point for any governance framework is a set of core principles. These are underlying high-level ethical and operational guidelines – your non-negotiables for how AI gets built and used.

Here are six example principles split into two core areas:

Oversight & Integrity

- Accountability: Define clear roles and ownership for AI usage. Know who’s responsible for model outcomes and empower leaders to take corrective action.

- Ethics: Align AI with moral and societal values. Actively guard against bias or discriminatory outcomes.

- Transparency: Make your AI systems understandable. This allows effective auditing and builds technical literacy across teams.

User Rights & Protection

- Security: Guard against unauthorised access and misuse. Protect systems from compromise.

- Privacy: Safeguard personal and sensitive data. Stay compliant with GDPR and evolving global standards.

- Control: Give users and your organisation the means to override or restrict AI outputs to stay aligned with human judgement.

These principles are deliberately broad. They form the basis of many governance policies, which are then narrowed down using specific organisational context.

How Governance Changes Shape Up the Stack

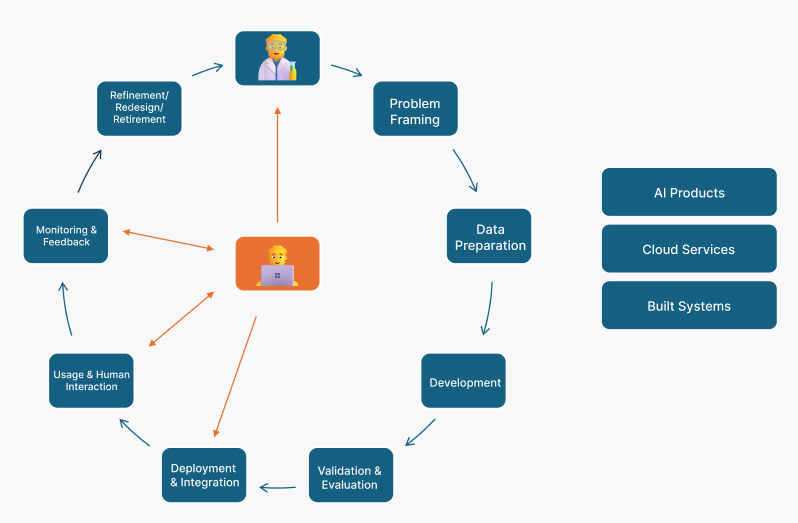

Governance doesn’t look the same at every level. Consider a typical AI project lifecycle, with your users and project managers at the centre and your engineers and data scientists embedded in the process.

We can approach this lifecycle at three different levels:

- Built systems: Built from scratch by data science teams. Here governance focuses on model development standards, data selection (to mitigate bias or toxicity) and hands-on monitoring.

- Cloud services: Plug-and-play frameworks where your team provides data and tweaks. You’re responsible for due diligence on service choice, providing clean data and verifying outputs comply with standards.

- AI products: Tools like Copilot or ChatGPT. Governance shifts to vetting vendors, understanding their transparency commitments and educating employees on approved use.

Governance doesn’t diminish as we move up the stack; it simply changes shape.

Turning Principles into Policy

So how do you move from abstract principles to something actionable?

A practical first step is an overarching AI governance policy document, tailored to your existing organisation. This might include:

- A formal statement of your principles

- Tables of roles & responsibilities

- Clear implementation guides with examples

- Checklists for assessing risks and impacts before adoption

- Standards for monitoring, auditing and escalation paths

Good policy documents are clear, accessible and dynamic. Avoid over-complication that restricts workflows and innovation, as it sharply reduces the likelihood of widespread adoption.

Mitigating the Biggest Risks

Build policies that actively spot and reduce risks. Examples include:

- Shadow AI exposure: Keep a registry of approved (and banned) tools. Provide sanctioned alternatives – e.g. enterprise-grade ChatGPT – to steer teams away from unsafe workarounds.

- Model drift & stale data: As Zillow discovered, failing to monitor changing data can be ruinous. Bake regular model reviews into your policy.

- Sensitive inputs: Put guardrails in place (both technical and policy-based) to stop developers pasting IP into consumer SaaS tools.

- Privacy leaks: Ensure privacy reviews are a standard step in your deployment process.
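As a rough illustration of the first and third items above, a tool registry and a technical guardrail can be combined into a single pre-submission check. This is a minimal sketch, not a production control: the registry entries, the `check_prompt` function and the regular expressions are all hypothetical, and a real deployment would tune the patterns to its own secrets and IP markers.

```python
from __future__ import annotations

import re
from dataclasses import dataclass

# Hypothetical registry: tool names and statuses are illustrative only.
TOOL_REGISTRY = {
    "chatgpt-enterprise": "approved",
    "chatgpt-consumer": "banned",
}

# Illustrative patterns for content that should never leave the organisation.
SENSITIVE_PATTERNS = {
    "private_key": re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),
    "aws_access_key": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "confidential_marker": re.compile(r"\bconfidential\b", re.IGNORECASE),
}


@dataclass
class Decision:
    allowed: bool
    reasons: list[str]


def check_prompt(tool: str, prompt: str) -> Decision:
    """Block prompts sent to unapproved tools or containing sensitive content."""
    reasons = []
    # Tools missing from the registry are treated as unregistered, not approved.
    status = TOOL_REGISTRY.get(tool, "unregistered")
    if status != "approved":
        reasons.append(f"tool is {status}")
    for name, pattern in SENSITIVE_PATTERNS.items():
        if pattern.search(prompt):
            reasons.append(f"matched {name}")
    return Decision(allowed=not reasons, reasons=reasons)
```

Returning the reasons alongside the decision means a blocked request can point the user towards the sanctioned alternative rather than simply failing.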

Consider introducing lightweight artefacts like audit checklists or readiness questionnaires. You can build these into your governance policy document, or maintain them as separate tools so they are easier to update. Either way, they integrate governance without adding heavy process that stifles engineering momentum.
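One way to keep such a questionnaire lightweight is to store it as data next to the code it governs, so it can be versioned and checked automatically. The sketch below is an assumption about how that might look: the `ChecklistItem` type, the example questions and the `assess` function are hypothetical, and the questions would be replaced with your own.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class ChecklistItem:
    question: str
    principle: str          # which core principle the question supports
    blocking: bool = True   # blocking items must be answered "yes" before release


# Hypothetical example questions, one per line of enquiry.
READINESS_CHECKLIST = [
    ChecklistItem("Is there a named owner accountable for model outcomes?", "Accountability"),
    ChecklistItem("Has a privacy review been completed?", "Privacy"),
    ChecklistItem("Is a schedule for regular model reviews agreed?", "Transparency", blocking=False),
]


def assess(answers: dict[str, bool]) -> tuple[bool, list[str]]:
    """Return (ready, unresolved blocking questions). Unanswered counts as "no"."""
    blockers = [
        item.question
        for item in READINESS_CHECKLIST
        if item.blocking and not answers.get(item.question, False)
    ]
    return (not blockers, blockers)
```

Treating unanswered questions as "no" is a deliberate choice here: it makes the default outcome "not ready", which is usually the safer failure mode.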

People: The True Drivers of Governance

No policy lives in a vacuum. Successful AI governance comes down to people.

- Dedicated roles: Many organisations appoint an AI governance lead – often a data scientist or architect with a passion for responsible AI. They bridge executive strategy and daily developer practice.

- Defined responsibilities: Make sure everyone knows how governance relates to their role, and who to go to with questions.

- Periodic training: Keep teams current on new models, new regulations, and what policies mean for their work.

- Open culture: Foster spaces to raise concerns, suggest improvements and discuss AI ethics. This not only improves adoption – it makes your governance framework stronger and more relevant.

Staying Dynamic in a Fast-Moving World

AI is developing rapidly: ChatGPT took two months to reach 100 million users, at the time the fastest user growth ever recorded for a consumer application. (The Guardian, 2023) Meanwhile, model sizes are growing by orders of magnitude, costs to train are plummeting and multi-agent systems are pushing us closer to artificial general intelligence.

In practice, this means:

- Review your policies often: use strict version control and iterate quickly.

- Monitor your deployed systems: stay alert for unanticipated changes, especially if using enterprise models updated outside your control.

- Keep learning: from regulatory frameworks (like the UK’s pro-innovation principles or ISO 42001) to evolving global standards, staying informed is essential.
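For the monitoring point above, one common way to spot unanticipated change in a numeric input or model score is the population stability index (PSI), which compares a baseline sample against recent data. The sketch below is illustrative only: the function name, the bin count and the smoothing constant are assumptions, and the often-quoted alert threshold of 0.25 is a rule of thumb rather than a standard.

```python
import math
from typing import Sequence


def population_stability_index(
    expected: Sequence[float],
    actual: Sequence[float],
    bins: int = 10,
    eps: float = 1e-6,
) -> float:
    """PSI between a baseline sample and a recent sample of one numeric feature."""
    if not expected or not actual:
        raise ValueError("both samples must be non-empty")
    lo, hi = min(expected), max(expected)
    if hi == lo:
        raise ValueError("baseline sample has no spread to bin")
    width = (hi - lo) / bins

    def proportions(values: Sequence[float]) -> list:
        counts = [0] * bins
        for v in values:
            # Values outside the baseline range are clamped into the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # eps keeps empty bins from producing log(0) or division by zero.
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


baseline = [i / 100 for i in range(100)]
recent = [0.5 + i / 200 for i in range(100)]  # synthetic, deliberately shifted sample
drifted = population_stability_index(baseline, recent) > 0.25  # rule-of-thumb threshold
```

A check like this could run on a schedule against each monitored feature, with any breach feeding the escalation path defined in your policy.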

Where to Start (Wherever You Are)

Not every organisation is at the same stage. If you’re only just exploring AI:

- Audit your workflows. Where could AI realistically help? Is shadow AI already creeping in?

- Use this to shape your first minimal governance guardrails.

If you’re sporadically using AI via contractors or pilot projects:

- Identify who might lead your governance efforts. Do you have data specialists who can step up? What lessons from past projects can be formalised into your first policies?

If you’re mature in AI use but light on governance:

- Start documenting your implicit standards. Turn them into explicit, auditable principles and policies. Avoid slowing innovation, but build the right checks so your systems stay robust and ethical.

In Closing

AI governance boils down to three pillars: policies, principles and people. Get them right and you unlock AI’s potential in a way that’s safe and aligned with your values.